Robot experiment shows people trust robots more when robots explain what they are doing

A team of researchers from the University of California, Los Angeles, and the California Institute of Technology has found through experiments that humans tend to trust robots more when the robots communicate what they are doing. In their paper published in the journal Science Advances, the group describes programming a robot to report what it was doing in different ways and then showing it in action to volunteers.

As robots become more advanced, they are expected to become more common—we may wind up interacting with one or more of them on a daily basis. Such a scenario makes some people uneasy—the thought of interacting or working with a machine that not only carries out specific assignments, but does so in seemingly intelligent ways might seem a little off-putting. Scientists have suggested one way to reduce the anxiety people experience when working with robots is to have the robots explain what they are doing.

In this new effort, the researchers noted that most work being done with robots is focused on getting a task done—little is being done to promote harmony between robots and humans. To find out if having a robot simply explain what it is doing might reduce anxiety in humans, the researchers taught a robot to unscrew a medicine cap—and to explain what it was doing as it carried out its task in three different ways.

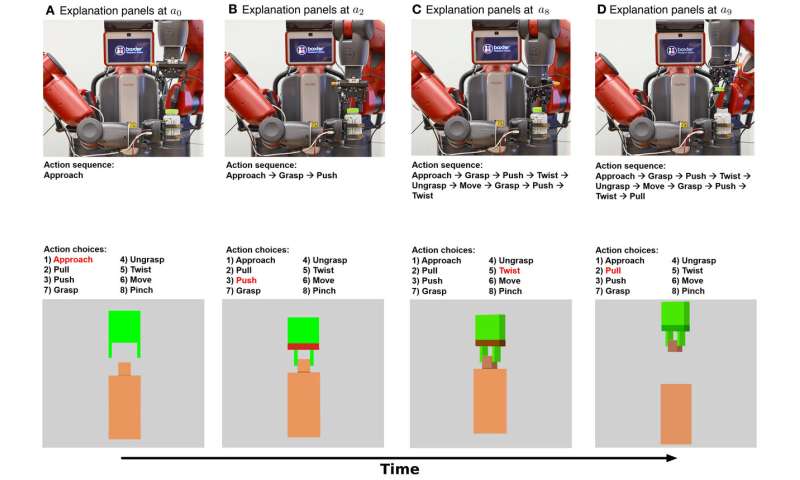

The first type of explanation was called symbolic, or mechanistic. It involved having the robot display on a video screen each of the actions it was performing as part of a string of actions, e.g., grasp, push or twist. The second type of explanation was called haptic, or functional. It involved displaying the general function being performed as the robot went about each step in a task, such as approaching, pushing, twisting or pulling. The third form of explanation was a simple text message that described what the robot was going to do.

The researchers then asked 150 volunteers to watch the robot open a medicine bottle, with different volunteers shown different explanations. The researchers report that the volunteers gave the highest trust ratings when they were shown both the haptic and the symbolic explanations. The lowest ratings came from those who saw just the text message. The researchers suggest that their experiment shows humans are more likely to trust a robot if they are given enough information about what it is doing. They say the next step is to teach robots to report why they are performing an action.

AI's future potential hinges on consensus: NAM report

The role of artificial intelligence, or machine learning, will be pivotal as the health care industry wrestles with a gargantuan amount of data that could improve, or muddle, health and cost priorities, according to a National Academy of Medicine Special Publication on the use of AI in health care.

Yet the current explosion of investment and development is happening without an underlying consensus on responsible, transparent deployment, which could limit AI's potential.

The new report is designed to be a comprehensive reference for organizational leaders, health care professionals, data analysts, model developers and those who are working to integrate machine learning into health care, said Vanderbilt University Medical Center's Michael Matheny, MD, MS, MPH, Associate Professor in the Department of Biomedical Informatics, and co-editor of AI in Healthcare: The Hope, The Hype, The Promise, The Peril.

"It's critical for the health care community to learn from both the successes, but also the challenges and recent failures in use of these tools. We set out to catalog important examples in health care AI, highlight best practices around AI development and implementation, and offer key points that need to be discussed for consensus to be achieved on how to address them as an AI community and society," said Matheny.

Matheny emphasizes that AI applications in health care look nothing like the mass-market image of self-driving cars that is often synonymous with machine learning or tech-driven systems.

For the immediate future in health care, AI should be thought of as a tool to support and complement the decision-making of highly trained professionals in delivering care in collaboration with patients and their goals, Matheny said.

Recent advances in deep learning and related technologies have met with great success in image interpretation, such as radiology and retinal exams, which has spurred a rush toward AI development that drew first venture capital funding and then industry giants. However, some of these tools have had problems with bias stemming from the populations used to develop them, or from the choice of an inappropriate target. Data analysts and developers need to work toward increased data access and standardization, as well as thoughtful development, so that algorithms are not biased against already marginalized patients.

The editors hope this report can contribute to the dialogue on patient inclusivity and fairness in the use of AI tools, and on the need for careful development, implementation, and surveillance of these tools to optimize their chance of success, Matheny said.

Matheny along with Stanford University School of Medicine's Sonoo Thadaney Israni, MBA, and Mathematica Policy Research's Danielle Whicher, Ph.D., MS, penned an accompanying piece for JAMA Network about the watershed moment in which the industry finds itself.

"AI has the potential to revolutionize health care. However, as we move into a future supported by technology together, we must ensure high data quality standards, that equity and inclusivity are always prioritized, that transparency is use-case-specific, that new technologies are supported by appropriate and adequate education and training, and that all technologies are appropriately regulated and supported by specific and tailored legislation," the National Academy of Medicine wrote in a release.

"I want people to use this report as a foil to hone the national discourse on a few key areas including education, equity in AI, uses that support human cognition rather than replacing it, and separating out AI transparency into data, algorithmic, and performance transparency," said Matheny.

More information: National Academy of Medicine Special Publication: nam.edu/artificial-intelligenc … special-publication/

Michael E. Matheny et al. Artificial Intelligence in Health Care, JAMA (2019). DOI: 10.1001/jama.2019.21579

Journal information: Journal of the American Medical Association
