by Bob Yirka, Medical Xpress

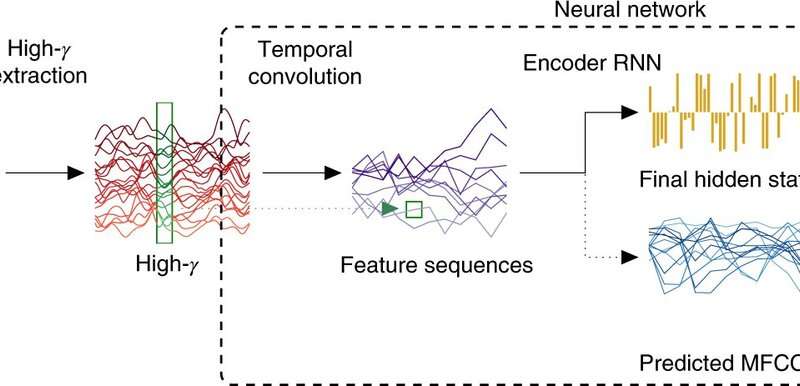

The decoding pipeline. Credit: Nature Neuroscience (2020). DOI: 10.1038/s41593-020-0608-8

A team of researchers at the University of California, San Francisco, has taken another step toward the development of a computer able to decipher speech in the human mind. In their paper published in the journal Nature Neuroscience, the group describes their approach to using AI systems to read and translate human thoughts. Gregory Cogan with Duke University has published a News & Views piece outlining the work by the team in California in the same journal issue.

Over the past century, people have wondered if it might be possible to create a machine that could read the human mind. Such ideas have most often been expressed in movies where scientists try to read the mind of a spy or terrorist. Recently, such systems have come to be seen as a possible way for people with speech disabilities to communicate. The advent of artificial intelligence, and more specifically neural networks, has brought the possibility ever closer, with machines able to read brain waves and translate some of them into words. In this new effort, the research team has taken the idea a step further by developing a system able to decipher whole sentences.

The work involved developing a more advanced AI system and recruiting four women with epilepsy, each of whom had been fitted with brain-implanted electrodes to monitor their condition. The researchers used readings from the electrodes to capture signals from different parts of the women's brains as they read sentences out loud. The data from the electrodes was sent to a neural network that processed the information and linked certain brain signals to words as they were being processed and spoken by the volunteer. Each sentence was spoken twice by each volunteer, but only the first reading was used for training the neural network; the second was used for testing. After processing the brain signal data, the first neural network sent the results to a second neural network that tried to form sentences from them.
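The train/test arrangement described above can be sketched in a few lines of Python. This is only an illustration of the idea of holding out the second reading of each sentence; the `Recording` type, its field names, and the `split_by_repetition` helper are hypothetical and are not taken from the paper.

```python
from typing import List, NamedTuple, Tuple


class Recording(NamedTuple):
    """One reading of one sentence (hypothetical structure for illustration)."""
    sentence: str
    repetition: int       # 1 = first reading, 2 = second reading
    signal: List[float]   # stand-in for the electrode readings


def split_by_repetition(
    recordings: List[Recording],
) -> Tuple[List[Recording], List[Recording]]:
    """Use first readings for training and second readings for testing."""
    train = [r for r in recordings if r.repetition == 1]
    test = [r for r in recordings if r.repetition == 2]
    return train, test


# Toy data: two sentences, each read twice.
recordings = [
    Recording("the cat sat", 1, [0.1, 0.2]),
    Recording("the cat sat", 2, [0.1, 0.3]),
    Recording("a dog ran", 1, [0.5, 0.4]),
    Recording("a dog ran", 2, [0.6, 0.4]),
]
train_set, test_set = split_by_repetition(recordings)
```

Keeping the second reading out of training means the system is scored on brain signals it has not seen, even though the sentences themselves are familiar.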

The researchers found that their system had a best-case error rate of just 3%. They note, however, that it was working with a very limited vocabulary of just 250 words, far fewer than the hundreds of thousands that most humans are able to recognize. Still, they suggest it might be enough for someone who cannot speak any words at all.
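The article does not spell out how the error rate is measured. A common choice for sentence decoding is word error rate: the number of word substitutions, insertions and deletions needed to turn the decoded sentence into the spoken one, divided by the length of the spoken sentence. Assuming that metric, a minimal sketch looks like this (the function name `word_error_rate` and the example sentences are illustrative, not from the paper):

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference sentence must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,                      # deletion
                curr[j - 1] + 1,                  # insertion
                prev[j - 1] + substitution_cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


# One wrong word in a six-word sentence.
rate = word_error_rate("the cat sat on the mat", "the cat sat on a mat")
```

On this reading, a 3% rate corresponds to roughly one wrong word in every 33 spoken.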


More information: Joseph G. Makin et al. Machine translation of cortical activity to text with an encoder–decoder framework, Nature Neuroscience (2020). DOI: 10.1038/s41593-020-0608-8
