The ‘least crazy’ idea: Early dark energy could solve a cosmological conundrum

Dan Falk

Thu, September 28, 2023

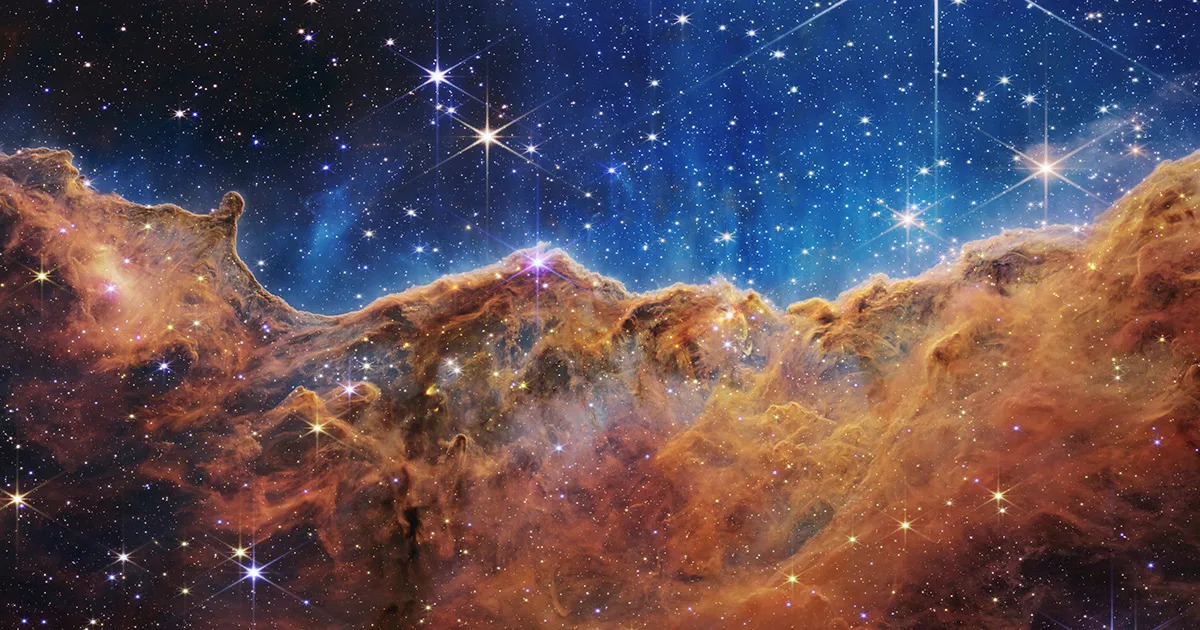

CREDITS: NASA, ESA, CSA, AND STSCI

At the heart of the Big Bang model of cosmic origins is the observation that the universe is expanding, something astronomers have known for nearly a century. And yet, determining just how fast the universe is expanding has been frustratingly difficult to accomplish. In fact, it’s worse than that: Using one type of measurement, based on the cosmic microwave background — radiation left over from the Big Bang — astronomers find one value for the universe’s expansion rate. A different type of measurement, based on observations of light from exploding stars called supernovas, yields another value. And the two numbers disagree.

As those measurements get more and more precise, that disagreement becomes harder and harder to explain. In recent years, the discrepancy has even been given a name — the “Hubble tension” — after the astronomer Edwin Hubble, one of the first to propose that the universe is expanding.

The universe’s current expansion rate is called the “Hubble constant,” designated by the symbol H0. Put simply, the Hubble constant can predict how fast two celestial objects — say, two galaxies at a given distance apart — will appear to move away from each other. Technically, this speed is usually expressed in the not-very-intuitive units of “kilometers per second per megaparsec.” That means that for every megaparsec (a little more than 3 million light-years — nearly 20 million trillion miles) separating two distant celestial objects, they will appear to fly apart at a certain speed (typically measured in kilometers per second).

For decades, astronomers argued about whether that speed (per megaparsec of separation) was close to 50 or closer to 100 kilometers per second. Today the two methods appear to yield values for the Hubble constant of about 68 km/s/Mpc on the one hand and about 73 or 74 km/s/Mpc on the other.
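
To make the units concrete, here is a minimal sketch of the relation described above, in which apparent recession speed equals the Hubble constant times distance. The function name and the 100-megaparsec separation are illustrative assumptions, not taken from the article; the two H0 values are the approximate figures quoted in the text.

```python
# Hubble's law for relatively nearby galaxies: speed = H0 * distance.
# H0 is in km/s per megaparsec, distance in megaparsecs, result in km/s.


def recession_speed_km_s(h0_km_s_per_mpc: float, distance_mpc: float) -> float:
    """Apparent recession speed of a galaxy at the given distance."""
    return h0_km_s_per_mpc * distance_mpc


# Approximate values quoted in the article.
H0_FROM_CMB = 68.0
H0_FROM_SUPERNOVAE = 73.0

# Hypothetical separation, chosen only for illustration.
DISTANCE_MPC = 100.0

if __name__ == "__main__":
    for label, h0 in (("CMB", H0_FROM_CMB), ("supernovae", H0_FROM_SUPERNOVAE)):
        speed = recession_speed_km_s(h0, DISTANCE_MPC)
        print(f"{label}: {speed:.0f} km/s at {DISTANCE_MPC:.0f} Mpc")
```

At that assumed separation, the two values of the Hubble constant predict speeds that differ by a few hundred kilometers per second.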

That may seem like an insignificant difference, but for astronomers, the discrepancy is a big deal: The Hubble constant is perhaps the most important number in all of cosmology. It informs scientists’ understanding of the origins and future of the cosmos, and reflects their best physics — anything amiss suggests there may be missing pieces in that physics. Both of the measurements now come with fairly narrow margins of error, so the two figures, as close as they may seem, are a source of conflict.

Another source of consternation is the physics driving the cosmic expansion, especially following the 1998 discovery of a mysterious entity dubbed “dark energy.”

In the Big Bang model, spacetime began expanding some 13.8 billion years ago. Later, galaxies formed, and the expansion carried those galaxies along with it, making them rush away from one another. But gravity causes matter to attract matter, which ought to slow that outward expansion, and eventually maybe even make those galaxies reverse course. In fact, the universe’s expansion did slow down for the first several billion years following the Big Bang. Then, strangely, it began to speed up again. Astronomers attribute that outward push to dark energy.

But no one knows what dark energy actually is. One suggestion is that it might be a kind of energy associated with empty space known as the “cosmological constant,” an idea first proposed by Albert Einstein in 1917. But it’s also possible that, rather than being constant, the strength of dark energy’s push may have varied over the eons.

For theoretical physicist Marc Kamionkowski, the Hubble tension is an urgent problem. But he and his colleagues may have found a way forward — an idea called “early dark energy.” He and Adam Riess, both of Johns Hopkins University, explore the nature of the tension and the prospects for eventually mediating it in the 2023 Annual Review of Nuclear and Particle Science.

In 2021, Kamionkowski was awarded the Gruber Cosmology Prize, one of the field’s top honors, together with Uroš Seljak and Matias Zaldarriaga, for developing techniques for studying the cosmic microwave background. Though Kamionkowski spends much of his time working on problems in theoretical astrophysics, cosmology and particle physics, his diverse interests make him hard to pigeonhole. “My interests are eclectic and change from year to year,” he says.

This conversation has been edited for length and clarity.

In your paper, you talk about this idea of “early dark energy.” What is that?

With the Hubble tension, we have an expansion rate for the universe that we infer from interpreting the cosmic microwave background measurements, and we have an expansion rate that we infer more directly from supernova data — and they disagree.

And most solutions or explanations for this Hubble tension involve changes to the mathematical description of the components and evolution of the universe — the standard cosmological model. Most of the early efforts to understand the discrepancy involved changes to the late-time behavior of the universe as described by the standard cosmological model. But nearly all of those ideas don’t work, because they postulate very strange, new physics. And even if you’re willing to stomach these very unusual, exotic physics scenarios, they’re inconsistent with the data, because we have constraints based on observational data on the late expansion history of the universe that don’t match these scenarios.

So the other possibility is to change something about the model of the early history of the universe. And early dark energy is our first effort to do that. So early dark energy is a class of models in which the early expansion history of the universe is altered through the introduction of some new exotic component of matter that we call early dark energy.

“A component of matter” — is dark energy a type of matter?

It is a type of “matter,” but unlike any we experience in our daily lives. You could also call it a “fluid,” but again, it’s not like any fluids we have on Earth or in the solar system.

Dark energy appears to be pushing galaxies away from one another at an accelerating rate — would “early dark energy” add a new kind of dark energy to the mix?

Well, we don’t really know what dark energy is, and we don’t know what early dark energy is, so it’s hard to say whether they’re the same or different. However, the family of ideas we’ve developed for early dark energy are pretty much the same as those we’ve developed for dark energy but they are active at a different point in time.

The cosmological constant is the simplest hypothesis for something more broadly referred to as dark energy, which is some component of matter that has a negative pressure and the correct energy density required to account for the observations. And early dark energy is a different type of dark energy, in that it would become important in the early universe rather than the later universe.

Turning back to the Hubble tension: You said one measurement comes from the cosmic microwave background, and the other from supernova data. Tell me more about these two measurements.

The cosmic microwave background is the gas of “relic radiation” left over from the Big Bang. We have measured the fluctuations in the intensity, or temperature, of that cosmic microwave background across the entire sky. And by modeling the physics that gives rise to those fluctuations, we can infer a number of cosmological parameters (the numerical values for terms in the math of the standard cosmological model).

So we have these images of the cosmic microwave background, which look like images of noise — but the noise has certain characteristics that we can quantify. And our physical models allow us to predict the statistical characteristics of those cosmic microwave background fluctuations. And by fitting the observations to the models, we can work out various cosmological parameters, and the Hubble constant is one of them.

And the second method?

The Hubble constant can also be inferred from supernovae, which gives you a larger value. That’s a little more straightforward.… We infer the distances to these objects by seeing how bright they appear on the sky. Something that is farther away will be fainter than something that’s close. And then we also measure the velocity at which it’s moving away from us by detecting Doppler shifts in the frequencies of certain atomic transition lines. That gives us around 73 or 74 kilometers per second per megaparsec. The cosmic microwave background gives a value of about 68.
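
The two steps described here, distance from apparent brightness and speed from Doppler shift, can be sketched in a few lines. This is a simplified illustration, not the procedure the supernova teams actually use. It assumes the standard distance-modulus relation and the low-redshift approximation v = cz. The magnitudes and redshift below are made-up inputs for a hypothetical supernova, and all function names are illustrative.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458


def distance_mpc(apparent_mag: float, absolute_mag: float) -> float:
    """Distance from the distance modulus m - M = 5 * log10(d / 10 pc)."""
    distance_pc = 10 ** ((apparent_mag - absolute_mag + 5) / 5)
    return distance_pc / 1e6  # parsecs to megaparsecs


def doppler_speed_km_s(redshift: float) -> float:
    """Low-redshift approximation: recession speed = c * z."""
    return SPEED_OF_LIGHT_KM_S * redshift


def hubble_constant(apparent_mag: float, absolute_mag: float, redshift: float) -> float:
    """H0 in km/s/Mpc from a single object's brightness and redshift."""
    return doppler_speed_km_s(redshift) / distance_mpc(apparent_mag, absolute_mag)


if __name__ == "__main__":
    # Hypothetical supernova: assumed intrinsic brightness M = -19.3,
    # observed brightness m = 16.0, measured redshift z = 0.028.
    h0 = hubble_constant(apparent_mag=16.0, absolute_mag=-19.3, redshift=0.028)
    print(f"H0 is roughly {h0:.1f} km/s/Mpc")
```

The fainter the supernova appears for a given intrinsic brightness, the larger the inferred distance and the smaller the resulting H0, which is why calibrating the intrinsic brightness matters so much.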

What’s the margin of error between the two measurements?

The discrepancy between the two results is five sigma, so 100,000-to-one odds against it being just a statistical fluctuation.
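
As a rough sketch of how such a "sigma" figure is computed, the difference between two measurements is divided by their combined uncertainty. The calculation assumes independent, Gaussian errors. The central values and error bars below are illustrative assumptions, chosen to be in line with the "about 68" and "73 or 74" figures quoted above; the article does not give the uncertainties.

```python
import math


def tension_sigma(value_a: float, sigma_a: float, value_b: float, sigma_b: float) -> float:
    """Difference between two measurements in units of their combined error.

    Assumes the two measurements are independent with Gaussian errors,
    so the uncertainties add in quadrature.
    """
    return abs(value_a - value_b) / math.hypot(sigma_a, sigma_b)


if __name__ == "__main__":
    # Assumed illustrative inputs in km/s/Mpc (not taken from the article).
    cmb_value, cmb_error = 67.4, 0.5
    supernova_value, supernova_error = 73.0, 1.0
    sigma = tension_sigma(cmb_value, cmb_error, supernova_value, supernova_error)
    print(f"Tension: about {sigma:.1f} sigma")
```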

The first question that comes to mind would be, maybe one of the two approaches had some systematic error, or something was overlooked. But I’m sure people have spent months or years trying to see if that was the case.

There’s no simple, obvious mistake, in either case. The cosmic microwave background analyses are complicated, but straightforward. And many different people in many different groups have done these analyses, with different tools on different datasets. And that is a robust measurement. And then there’s the supernova results. And those have been scrutinized by many, many people, and there’s nothing obvious that’s come up that’s wrong with the measurement.

So just to recap: Data gleaned from the cosmic microwave background (CMB) radiation yield one value for the Hubble constant, while data obtained from supernovae give you another, somewhat higher value. So what’s going on? Is it possible there’s something about the CMB that we don’t understand, or something about supernovae that we’re wrong about?

Well, honestly, we have no idea what’s going on. One possibility is that there’s something in our interpretation of the cosmic microwave background measurements — the way it’s analyzed — that is missing. But again, a lot of people have been looking at this for a long time, and nothing obvious has come up.

Another possibility is that there is something missing in the interpretation of the supernova data; but again, a lot of people look at that, and nothing has come up. And so a third possibility is that there’s some new physics beyond what’s in our standard cosmological model.

Can you explain what the standard cosmological model is?

We have a mathematical model for the origin and evolution of the whole universe that is fit by five parameters — or six, depending on how you count — that we need to specify or fit to the data to account for all of the cosmological observations. And it works.

Contrast that with the model for the origin of the Earth, or the solar system. The Earth is a lot closer; we see it every day. We have a huge amount of information about the Earth. But we don’t have a mathematical model for its origin that is anywhere close to as simple and successful as the standard cosmological model. It’s a remarkable thing that we can talk about a mathematical physical model for the origin and evolution of the universe.

Why is this standard cosmological model called the “lambda CDM” model?

It’s a ridiculous name. We call it “lambda CDM,” where CDM stands for “cold dark matter” and the Greek letter lambda stands for the cosmological constant. But it’s just a ridiculous name because lambda and CDM are just two of the ingredients, and they’re not even the most crucial ingredients. It’s like naming a salad “salt-and-pepper salad” because you put salt and pepper in it.

What are the other ingredients?

One of the other ingredients in the model is that, of the three possible cosmological geometries — open, closed or flat — the universe is flat; that is, the geometry of spacetime, on average, obeys the rules of Euclidean plane geometry. And the critical feature of the model is that the primordial universe is very, very smooth, but with very small-amplitude ripples in the density of the universe that are consistent with those that would be produced from a period of inflation in the early universe.

Inflation — that’s the idea of cosmic inflation, a very brief period in the early universe when the universe expanded very rapidly?

Inflation is in some sense an idea for what set the Big Bang in motion. In the early 1980s, particle theorists realized that theories of elementary-particle physics allowed for the existence of a substance that in the very early universe could temporarily behave like a cosmological constant of huge amplitude. This substance would allow a brief period of superaccelerated cosmological expansion and thereby blow a tiny, pre-inflationary patch of the universe into the huge universe we see today. The idea implies that our universe today is flat, as it appears now to be, and was initially very smooth — as consistent with the smoothness of the CMB — and has primordial density fluctuations like those in the CMB that would then provide the seeds for the later growth of galaxies and clusters of galaxies.

So if early dark energy is real, it would add one more ingredient to the universe?

It is more ingredients. It’s the last thing you want to resort to. New physics should always be the last thing that you ever resort to. But most people, I think, would agree that it’s the least ridiculous of all the explanations for the Hubble tension. That’s kind of the word on the street.

What would early dark energy’s role have been in the early universe?

Its only job is to increase the total energy density of the universe, and therefore increase the expansion rate of the universe for a brief period of time — within the first, say, 100,000 years after the Big Bang.

Why does a higher energy density lead to a greater expansion rate?

This is difficult to understand intuitively. A higher energy density implies a stronger gravitational field which, in the context of an expanding universe, is manifest as a faster expansion rate. This is sort of analogous to what might arise in planetary dynamics: According to Newton’s laws, if the mass of the sun were larger, the velocity of the Earth in its orbit would be larger (leading to a shorter year).
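
The analogy can be written out explicitly. As a sketch, assuming a spatially flat universe (the form of the Friedmann equation used here comes from standard general relativity and is not stated in the interview), the expansion rate $H$ is tied to the total density $\rho$, expressed as a mass density, by

$$
H^2 = \frac{8\pi G}{3}\,\rho ,
$$

while Newton's laws give the speed $v$ of a planet on a circular orbit of radius $r$ around a star of mass $M$ as

$$
v = \sqrt{\frac{G M}{r}} .
$$

In both cases the rate grows as the square root of the gravitating source: raise $\rho$ and $H$ increases, just as raising $M$ increases $v$.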

And just so I’m following this: You mentioned the two approaches to measuring the Hubble constant; one from supernovas and one from the CMB. And this idea of early dark energy allows you to interpret the CMB data in a slightly different manner, so that you come up with a slightly different value for the Hubble constant — one which more closely matches the supernova value. Right?

That is correct.

What kind of tests would have to be done to see if this approach is correct?

That’s pretty straightforward, and we’re making progress on it. The basic idea is that the early dark energy models are constructed to fit the data that we have. But the predictions that they make for data that we might not yet have can differ from lambda CDM. And in particular, we have measured the fluctuations of the cosmic microwave background. But we’ve imaged the cosmic microwave background with some finite angular resolution, which has been a fraction of a degree. With Planck, the satellite launched by the European Space Agency in 2009, it was about five arc-minute resolution — equivalent to one-sixth of the apparent width of a full moon.
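
The comparison with the moon is simple arithmetic. A quick check, assuming the full moon spans about half a degree on the sky (an approximate figure not given in the article):

```python
ARCMIN_PER_DEGREE = 60.0

# Assumed approximate apparent width of the full moon, in degrees.
MOON_WIDTH_DEG = 0.5

# Planck's angular resolution as quoted in the interview, in arc-minutes.
PLANCK_RESOLUTION_ARCMIN = 5.0

moon_width_arcmin = MOON_WIDTH_DEG * ARCMIN_PER_DEGREE
fraction_of_moon = PLANCK_RESOLUTION_ARCMIN / moon_width_arcmin

if __name__ == "__main__":
    print(f"Moon width: {moon_width_arcmin:.0f} arc-minutes")
    print(f"Planck resolution is about 1/{1 / fraction_of_moon:.0f} of that")
```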

Over the past decade, we’ve had experiments like ACT, which stands for Atacama Cosmology Telescope, and SPT, which stands for South Pole Telescope. These are two competing state-of-the-art cosmic microwave background experiments that have been ongoing for about the past decade, and keep improving. And they’re mapping the cosmic microwave background with better angular resolution, allowing us to see more features that we weren’t able to access with Planck. And the early dark energy models make predictions for these very small angular scale features that we’re now beginning to resolve and that differ from the predictions of lambda CDM. That suggests the possibility of new physics.

In the next few years, we expect to have data from the Simons Observatory, and on a decade timescale we expect to have new data from CMB-S4, this big US National Science Foundation and Department of Energy project. And so, if there’s a problem with lambda CDM, if there’s something different in the early expansion history of the universe beyond lambda CDM, the hope is that we’ll see it in there.

Is there evidence that could conceivably come from particle physics that would help you decide if early dark energy is on the right track?

In principle, someday we will have a theory for fundamental physics that unifies quantum gravity with this broad understanding of strong, weak and electromagnetic interactions. And so someday we might have a model that does that and says, look, there’s this additional new scalar field lying around that’s going to have exactly the properties that you need for early dark energy. So in principle, that could happen; in practice, we’re not getting a whole lot of guidance from that direction.

What’s next for you and your colleagues?

My personal interest in theoretical cosmology and astrophysics was really eclectic, and I kind of bounced around from one thing to another. My collaborators on the early dark energy paper, they’ve been very, very focused on continuing to construct and explore different types of early dark energy models. But it has become an endeavor of the community as a whole.

So there are lots of people, theorists, now all over the place, thinking about detailed models for early dark energy, following through with the detailed predictions of those models, and detailed comparisons of those predictions with measurements, as they become available. It’s not my personal top priority day-to-day in my research. But it is the top priority for many of the collaborators I had on the original work, and it’s definitely a top priority for many, many people in the community.

As I said, nobody thinks early dark energy is a great idea. But everybody agrees that it’s the least crazy idea — the most palatable of all the crazy models to explain the Hubble tension.

10.1146/knowable-092823-1

Dan Falk (@danfalk) is a science journalist based in Toronto. His books include The Science of Shakespeare: A New Look at the Playwright’s Universe and In Search of Time: The History, Physics, and Philosophy of Time.

This article originally appeared in Knowable Magazine, an independent journalistic endeavor from Annual Reviews.

Right before exploding, this star puffed out a sun's worth of mass

Keith Cooper

SPACE.COM

Thu, September 28, 2023

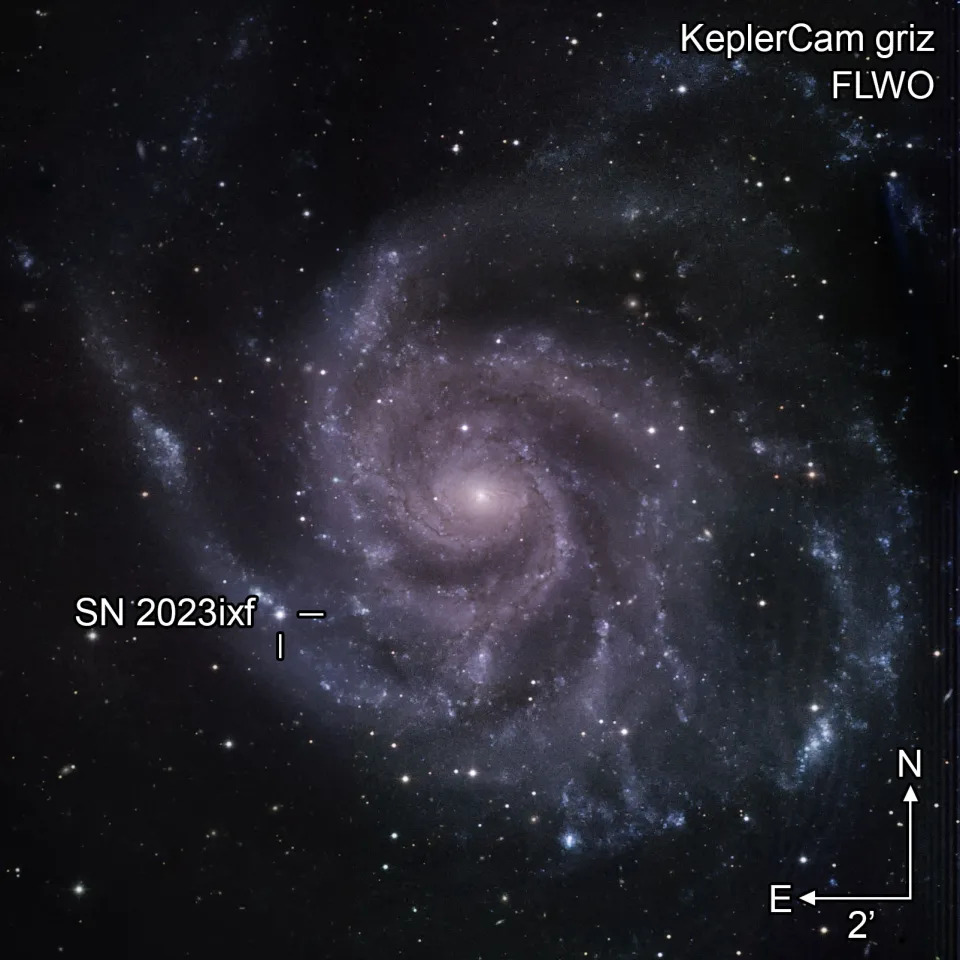

A massive star that exploded in the Pinwheel Galaxy in May appears to have unexpectedly ejected about one sun's worth of mass during the final years of its life before going supernova, new observations have shown. This discovery reveals more about the enigmatic end days of massive stars.

On the night of May 19, Japanese amateur astronomer Kōichi Itagaki was conducting his regular supernova sweep using telescopes based in three remote observatories dotted around the country: in Yamagata, in Okayama and on the island of Shikoku.

Amateur astronomers have a long history of discovering exploding stars before the professionals spot them: Itagaki has raked in over 170, just beating out UK amateur astronomer Tom Boles’ tally of more than 150. When Itagaki spotted the light of SN 2023ixf, however, he immediately knew he'd found something special. That’s because this star had exploded in the nearby Pinwheel Galaxy (Messier 101), which is just 20 million light-years away in the constellation of Ursa Major, the Great Bear. Cosmically speaking, that's pretty close.

Soon enough, amateur astronomers around the world started gazing at SN 2023ixf because the Pinwheel in general is a popular galaxy to observe. However, haste is key when it comes to supernova observations: Astronomers are keen to understand exactly what is happening in the moments immediately after a star goes supernova. Yet all too often, a supernova is spotted several days after the explosion took place, so they don’t get to see its earliest stages.

Considering how close, relatively speaking, SN 2023ixf was to us and how early it was identified, it was a prime candidate for close study.

Itagaki sprang into action.

"I received an urgent e-mail from Kōichi Itagaki as soon as he discovered SN 2023ixf," said postgraduate student Daichi Hiramatsu of the Harvard–Smithsonian Center for Astrophysics (CfA) in a statement.

The race to decode a supernova

Alerted to the supernova, Hiramatsu and colleagues immediately followed up with several professional telescopes at their disposal, including the 6.5-meter Multi Mirror Telescope (MMT) at the Fred Lawrence Whipple Observatory on Mount Hopkins in Arizona. They measured the supernova's light spectrum, and how that light changed over the following days and weeks. When plotted on a graph, this kind of data forms a "light curve."

The spectrum from SN 2023ixf showed that it was a type II supernova, a category of supernova explosion involving a star with more than eight times the mass of the sun. In the case of SN 2023ixf, searches in archival images of the Pinwheel suggested the exploded star may have had a mass between 8 and 10 times that of our sun. The spectrum was also very red, indicating the presence of lots of dust near the supernova that absorbed bluer wavelengths but let redder wavelengths pass. This was all fairly typical; what was extraordinary was the shape of the light curve.

Normally, a type II supernova experiences what astronomers call a 'shock breakout' very early in the supernova's evolution, as the blast wave expands outwards from the interior of the star and breaks through the star's surface. Yet the bump in the light curve that the flash from this shock breakout usually produces was missing. It didn’t turn up for several days. Was this a supernova in slow motion, or was something else afoot?

"The delayed shock breakout is direct evidence for the presence of dense material from recent mass loss," said Hiramatsu. "Our new observations revealed a significant and unexpected amount of mass loss — close to the mass of the sun — in the final year prior to explosion."

Imagine, if you will, an unstable star puffing off huge amounts of material from its surface. This creates a dusty cloud of ejected stellar material all around the doomed star. The supernova shock wave therefore not only has to break out through the star, blowing it apart, but also has to pass through all this ejected material before it becomes visible. Seemingly, this took several days for the supernova in question.

Massive stars often shed mass — just look at Betelgeuse’s shenanigans over late 2019 and early 2020, when it belched out a cloud of matter with ten times the mass of Earth’s moon that blocked some of Betelgeuse’s light, causing it to appear dim. However, Betelgeuse isn’t ready to go supernova just yet, and by the time it does, the ejected cloud will have moved far enough away from the star for the shock breakout to be immediately visible. In the case of SN 2023ixf, the ejected material was still very close to the star, meaning that it had only recently been ejected, and astronomers were not expecting that.

Hiramatsu’s supervisor at the CfA, Edo Berger, was able to observe SN 2023ixf with the Submillimeter Array on Mauna Kea in Hawaii, which sees the universe at long wavelengths. He was able to see the collision between the supernova shockwave and the circumstellar cloud.

"The only way to understand how massive stars behave in the final years of their lives up to the point of explosion is to discover supernovae when they are very young, and preferably nearby, and then to study them across multiple wavelengths," said Berger. "Using both optical and millimeter telescopes we effectively turned SN 2023ixf into a time machine to reconstruct what its progenitor star was doing up to the moment of its death."

The question then becomes, what caused the instability?

Stars, they're just like onions

We can think of an evolved massive star as being like an onion, with different layers. Each layer is made from a different element, produced by sequential nuclear burning in the star's respective layers as the stellar object ages and its core contracts and grows hotter. The outermost layer is hydrogen, then you get to helium. Then, you go through carbon, oxygen, neon and magnesium in succession until you reach silicon in the core. That silicon is able to undergo nuclear fusion reactions to form iron, and this is where nuclear fusion in a massive star’s core stops: fusing iron requires more energy than the reaction releases, so it cannot power the star.

Thus the core switches off, the star collapses onto it and then rebounds and explodes outwards.

One possibility is that the final stages of burning high-mass elements inside the star, such as silicon (which is used up in the space of about a day), is disruptive, causing pulses of energy that shudder through the star and lift material off its surface. It's certainly something that astronomers will look for in the future, now that they’ve been able to see it in a relatively close supernova.

What the story of SN 2023ixf does tell us is, at the very least, that despite all the professional surveys hunting for transient objects like supernovas, amateur astronomers can still make a difference.

"Without … Itagaki’s work and dedication, we would have missed the opportunity to gain critical understanding of the evolution of massive stars and their supernova explosions," said Hiramatsu.

In recognition of his work, Itagaki, who continued to make observations of the supernova that were of use to the CfA team, is listed as an author on the paper describing their results. That paper was published on Sept. 19 in The Astrophysical Journal Letters.
