IMAGE: ECOLOGICAL RECONSTRUCTION OF GIANT RHINOS AND THEIR ACCOMPANYING FAUNA IN THE LINXIA BASIN DURING THE OLIGOCENE

CREDIT: CHEN YU

The giant rhino, Paraceratherium, is considered the largest land mammal that ever lived and was mainly found in Asia, especially China, Mongolia, Kazakhstan, and Pakistan. How this genus dispersed across Asia was long a mystery, however. A new discovery has now shed light on this process.

Prof. DENG Tao from the Institute of Vertebrate Paleontology and Paleoanthropology (IVPP) of the Chinese Academy of Sciences and his collaborators from China and the U.S.A. recently reported a new species, Paraceratherium linxiaense sp. nov., which offers important clues to the dispersal of giant rhinos across Asia.

The study was published in Communications Biology on June 17.

The new species' fossils comprise a completely preserved skull and mandible with their associated atlas, as well as an axis and two thoracic vertebrae from another individual. The fossils were recovered from the Late Oligocene deposits of the Linxia Basin in Gansu Province, China, which is located on the northeastern border of the Tibetan Plateau.

Phylogenetic analysis yielded a single most parsimonious tree, which places P. linxiaense as a derived giant rhino, within the monophyletic clade of the Oligocene Asian Paraceratherium. Within the Paraceratherium clade, the researchers' phylogenetic analysis produced a series of progressively more-derived species--from P. grangeri, through P. huangheense, P. asiaticum, and P. bugtiense--finally terminating in P. lepidum and P. linxiaense. P. linxiaense is at a high level of specialization, similar to P. lepidum, and both are derived from P. bugtiense.

Adaptation of the atlas and axis to the large body and long neck of the giant rhino already characterized P. grangeri and P. bugtiense, and was further developed in P. linxiaense. Its atlas is elongated, indicating a long neck, and its axis is higher, with a nearly horizontal posterior articular face. These features are correlated with a more flexible neck.

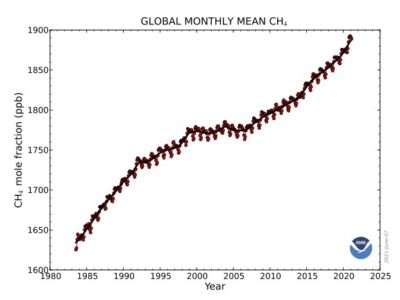

The giant rhino of western Pakistan is from the Oligocene strata, representing a single species, Paraceratherium bugtiense. On the other hand, the rest of the genus Paraceratherium, which is distributed across the Mongolian Plateau, northwestern China, and the area north of the Tibetan Plateau to Kazakhstan, is highly diversified.

The researchers found that all six species of Paraceratherium are sisters to Aralotherium and form a monophyletic clade in which P. grangeri is the most primitive, succeeded by P. huangheense and P. asiaticum.

The researchers were thus able to determine that, in the Early Oligocene, P. asiaticum dispersed westward to Kazakhstan and its descendant lineage expanded to South Asia as P. bugtiense. In the Late Oligocene, Paraceratherium returned northward, crossing the Tibetan area to produce P. lepidum to the west in Kazakhstan and P. linxiaense to the east in the Linxia Basin.

The researchers noted the aridity of the Early Oligocene in Central Asia at a time when South Asia was relatively moist, with a mosaic of forested and open landscapes. "Late Oligocene tropical conditions allowed the giant rhino to return northward to Central Asia, implying that the Tibetan region was still not uplifted as a high-elevation plateau," said Prof. DENG.

During the Oligocene, the giant rhino could obviously disperse freely from the Mongolian Plateau to South Asia along the eastern coast of the Tethys Ocean and perhaps through Tibet. The topographical possibility that the giant rhino crossed the Tibetan area to reach the Indian-Pakistani subcontinent in the Oligocene can also be supported by other evidence.

Up to the Late Oligocene, the evolution and migration from P. bugtiense to P. linxiaense and P. lepidum show that the "Tibetan Plateau" was not yet a barrier to the movement of the largest land mammal.

CAPTION

Holotype of Paraceratherium linxiaense sp. nov. Skull and mandible share the scale bar, but both the anterior and nuchal views have an independent scale bar.

CREDIT

IVPP

This research was supported by the Chinese Academy of Sciences, the National Natural Science Foundation of China, and the Second Comprehensive Scientific Expedition on the Tibetan Plateau.

CAPTION

Distribution and migration of Paraceratherium in Oligocene Eurasia. Localities of Early Oligocene species are marked in yellow, and localities of Late Oligocene species in red.

CREDIT

IVPP