AIR-Act2Act: A dataset for training social robots to interact with the elderly

To interact with humans and assist them in their day-to-day life, robots should have both verbal and non-verbal communication capabilities. In other words, they should be able to understand both what a user is saying and what their behavior indicates, adapting their speech, behavior and actions accordingly.

To teach social robots to interact with humans, roboticists need to train them on datasets containing human-human verbal and non-verbal interactions. Compiling these datasets can be quite time consuming, so they are currently fairly scarce, and those that exist are not always suitable for training robots to interact with specific segments of the population, such as children or the elderly.

To facilitate the development of robots that can best assist the elderly, researchers at the Electronics and Telecommunications Research Institute (ETRI) in South Korea recently created AIR-Act2Act, a dataset that can be used to teach robots non-verbal social behaviors. The new dataset was compiled as part of a broader project called AIR (AI for Robots), aimed at developing robots that can help older adults throughout their daily activities.

"Social robots can be great companions for lonely elderly people," Woo-Ri Ko, one of the researchers who carried out the study, told TechXplore. "To do this, however, robots should be able to understand the behavior of the elderly, infer their intentions, and respond appropriately. Machine learning is one way to implement this intelligence. Since it provides the ability to learn and improve automatically from experience, it can also allow robots to learn social skills by observing natural interactions between humans."

Ko and her colleagues were the first to record interactions between younger and older (i.e., senior) adults with the purpose of training social robots. The dataset they compiled contains over 5000 interactions, each with associated depth maps, body indexes and 3-D skeletal data of the interacting individuals.

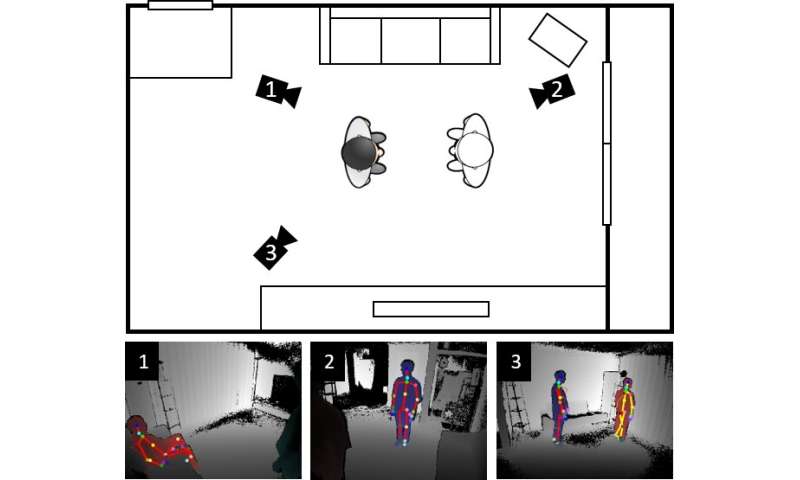

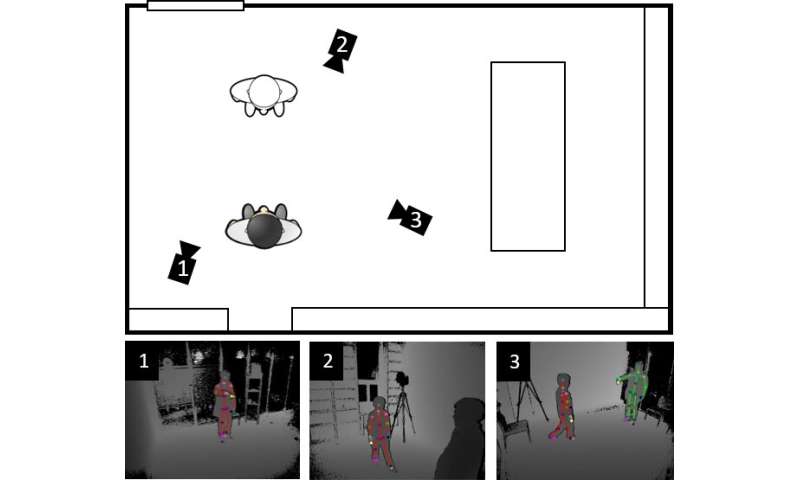

"AIR-Act2Act dataset is the only dataset up to date that specifically contains interactions with the elderly," Ko said. "We recruited 100 elderly people and two college students to perform 10 interactions in indoor environments and recorded data during these interactions. We also captured depth maps, body indexes and 3-D skeletal data of participants as they interacted with each other, using three Microsoft Kinect v2 cameras."

At a later stage, the researchers manually analyzed and refined the skeletal data they collected to identify instances in which the Kinect sensor did not track movements properly. This incorrect data was then adjusted or removed from the dataset.
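As a rough illustration of what such a check might look like, the sketch below flags frames in which any joint jumps implausibly far between consecutive frames, so they can be queued for manual review. It is not the researchers' procedure, which was manual; the function name `flag_suspect_frames`, the per-frame dictionary layout, the assumption that coordinates are in meters and the 0.5 m threshold are all hypothetical choices made for this example.

```python
import math

def flag_suspect_frames(frames, max_jump=0.5):
    """Return indices of frames where some joint moved more than
    max_jump (assumed meters) since the previous frame.

    frames: list of dicts mapping a joint name to an (x, y, z) tuple.
    The layout and threshold are assumptions made for this sketch.
    """
    suspects = []
    for i in range(1, len(frames)):
        prev, cur = frames[i - 1], frames[i]
        for name, pos in cur.items():
            # Only compare joints present in both frames.
            if name in prev and math.dist(pos, prev[name]) > max_jump:
                suspects.append(i)
                break  # one bad joint is enough to flag the frame
    return suspects

# Toy sequence: the hand "teleports" in frame 2, then snaps back in frame 3.
frames = [
    {"hand_right": (0.00, 0.0, 2.0)},
    {"hand_right": (0.01, 0.0, 2.0)},
    {"hand_right": (1.50, 0.0, 2.0)},
    {"hand_right": (0.02, 0.0, 2.0)},
]
suspects = flag_suspect_frames(frames)
```

Note that a single glitch flags two frames here, the jump away and the jump back, which is usually acceptable when the flagged frames are only candidates for a human to inspect.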

Unlike other existing datasets for training social robots, AIR-Act2Act also contains representations of the movements that should be emulated or learned by a robot. More specifically, Ko and her colleagues calculated, in terms of joint angles, the actions that a humanoid robot called NAO would need to perform to emulate the non-verbal behavior of the human participants in their data samples.
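To give a sense of how 3-D skeletal data can be turned into a joint angle, here is a minimal sketch that computes the angle at an elbow from three joint positions. It is an illustrative calculation only, not the method used to build the dataset; the function name `joint_angle` and the sample coordinates are invented for this example, and mapping such an angle onto a specific robot's joint conventions and limits is a further step that is not shown.

```python
import math

def joint_angle(a, b, c):
    """Angle in radians at point b between segments b->a and b->c.

    a, b, c are (x, y, z) tuples, e.g. shoulder, elbow and wrist.
    """
    v1 = [a[i] - b[i] for i in range(3)]
    v2 = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(v1, v2))
    n1 = math.sqrt(sum(x * x for x in v1))
    n2 = math.sqrt(sum(x * x for x in v2))
    if n1 == 0 or n2 == 0:
        raise ValueError("two joints coincide; angle is undefined")
    # Clamp to [-1, 1] to guard against floating-point drift.
    cos_t = max(-1.0, min(1.0, dot / (n1 * n2)))
    return math.acos(cos_t)

# Hypothetical positions: upper arm along x, forearm along y.
shoulder = (0.0, 0.0, 0.0)
elbow = (0.3, 0.0, 0.0)
wrist = (0.3, 0.25, 0.0)
angle = joint_angle(shoulder, elbow, wrist)
```

With these sample points the upper arm and forearm are perpendicular, so the computed elbow angle is a right angle.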

"Previous research used human-human interaction datasets to generate two social behaviors: handshakes and waiting," Ko said. "However, larger datasets were essential to generate more diverse behavior. We hope that our large-scale dataset will help advance this study further and promote related research."

Ko and her colleagues published AIR-Act2Act on GitHub, along with a series of useful Python scripts, so it can now be easily accessed by other developers worldwide. In the future, their dataset could enable the development of more advanced and responsive humanoid robots that can reproduce human non-verbal social behaviors while assisting the elderly.

"We are now conducting research exploring end-to-end learning-based social behavior generation using our dataset," Ko said. "We have already achieved promising results, which will be presented at the SMC 2020 conference. In the future, we plan to further expand on this research."

© 2020 Science X Network
