By Amber Jacobs and Will Jackson

Posted Sun 21 Jan 2024

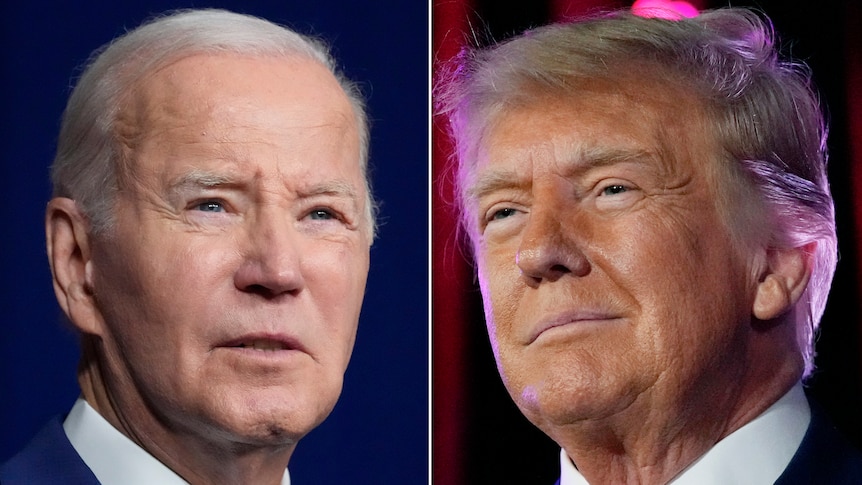

Political campaigners all over the world have begun to experiment with using generative AI.

(ABC News)

abc.net.au

Divyendra Singh Jadoun, an artificial intelligence (AI) expert based in Rajasthan in northern India, gets asked to do two kinds of jobs for political campaigns.

"One is to enhance the image of their existing politician that they are endorsing," he told the ABC, "and the second one is to harm the image of the opponent party."

Mr Jadoun mostly uses AI tools to translate and dub content, something that's crucial in a country as linguistically diverse as India.

But recently, he has had to turn down some more controversial requests.

Divyendra Singh Jadoun has had to turn down requests to use AI to produce political misinformation. (Supplied)

"One is the deepfake video where we swap the face of one person over to the other," he said.

"There were requests that you had to swap the face of the opponent party leader [onto] someone who is a look-alike of him and is doing something like drinking.

"And the second are the audio deepfakes to clone the voice of the person for harming [their] reputation ... by spreading misinformation, saying this is a leaked call from the politician."

A record number of people globally are heading to the polls this year — with elections to be held in about 50 countries including the world's biggest democracies in India, the United States, and Indonesia.

And with easy-to-use AI tools that give users the ability to create and manipulate realistic-looking fake images, video and audio, experts warn the world is likely to see a "tsunami of disinformation".

Misinformation rated globe's top risk

A report prepared for the World Economic Forum in Davos earlier this month found that misinformation, super-charged with artificial intelligence, was the top risk facing the globe in 2024.

It came after a year in which political campaigners around the world began to experiment with possible uses of new generative AI tools like ChatGPT, Midjourney and Stable Diffusion.

The Republican Party in the US made headlines in April with a video response released after President Joe Biden announced he would run again.

Titled Beat Biden, it depicted a dystopian future under a Biden administration and used imagery generated entirely by AI.

Donald Trump's Republican Party rival Ron DeSantis also released a video that reportedly included fake images of Mr Trump hugging former White House chief medical adviser Anthony Fauci.

The US Republican Party published an attack ad using AI-generated imagery. (YouTube)

In Argentina, image-generation tools were used in the lead-up to October's presidential elections to create campaign material that flooded social media and appeared on physical posters.

They included images created by the campaign for candidate Sergio Massa depicting him in a variety of inspirational styles and scenarios, including riding a lion.

Supporters of the eventual winner, Javier Milei, used generative AI extensively as well, for example portraying Mr Massa as a Mao Zedong-style dictator and Mr Milei himself as a cartoon lion.

According to the Financial Times, audio deepfakes and AI-generated video were used in attempts to discredit Bangladesh's opposition in the lead-up to the country's national elections on January 7 this year.

New apps have 'democratised' AI

Darrell West, a senior fellow with the Brookings Institution's Centre for Technology Innovation in the United States, said the new applications had "democratised" AI.

"Generative AI brings very powerful AI algorithms down to the level of the ordinary person," he said.

"It used to be if you wanted to use AI, you had to have a pretty technical background and sophisticated knowledge.

"Now, because AI is prompt-driven, and template driven, anybody can use it.

"So we have democratised the technology, but it's in the hands of anyone and so we're likely to see a tsunami of disinformation in the upcoming elections."

Mr West said AI could also be used during the administrative process of elections and weaponised to target swing voters.

"It's very easy to use AI to target very small groups of people," Mr West said.

"In most elections there is a relatively small undecided vote. What it can do is to identify [those] undecided voters, what are the issues or causes that they care about and develop targeted messages designed to persuade those individuals.

"People can [also] use AI to tell people to go to the wrong polling place and show up on the wrong day.

"There are some states in the US where they're using AI to clean up their voting rolls, but it means that some legitimate voters are getting kicked off the ballot and so they're not being able to vote."

And it's not just political campaigns using AI against each other: Mr West warned it could also be a powerful tool for foreign actors.

In December, Facebook owner Meta was forced to remove thousands of accounts based in China that were pretending to be Americans and posting about inflammatory issues like abortion.

And prior to Taiwan's recent election, the ruling Democratic Progressive Party accused Beijing of mounting a massive misinformation campaign in an attempt to influence the outcome.

"Foreign entities have a stake in many of the major elections [that] are going to take place in the next year in the United States, in India and Indonesia, and in the European Union," he said.

"In the United States for example, Russia, China, Iran, North Korea, the Saudis, the UAE and a number of other countries have a stake in this election."

AI being used ahead of Indonesian election

Ahead of Indonesia's presidential elections on February 14, AI content has been a big part of the three candidates' campaign strategies.

The Golkar party controversially produced an AI-generated video of its former leader, the dictator Suharto, who died in 2008, delivering an entirely new speech.

"I am president Suharto, the second president of Indonesia, inviting you to elect representatives of the people from Golkar," the AI Suharto says.

The Prabowo Subianto-Gibran Rakabuming Raka team also admitted to using AI in a video promoting its policy to provide free milk for elementary school pupils, to get around rules against using children in election advertising.

Nuurrianti Jalli, an assistant professor at Oklahoma State University's School of Media and Strategic Communications, said AI was not just being used by the official campaigns.

"In Indonesia, AI is increasingly employed not just as a tool for political campaigns aimed at reaching voters more effectively, but it’s also being harnessed by individuals to create electoral content," Dr Jalli said.

"This dual application of AI reflects its growing significance in the political landscape, both as a campaigning instrument and a means for citizens to participate in the political discourse."

Nuurrianti Jalli is an assistant professor at Oklahoma State University's School of Media and Strategic Communications. (Supplied)

Dr Jalli pointed to examples of widely shared misinformation, including a video that went viral on TikTok purporting to show presidential candidate Prabowo Subianto delivering a speech in fluent Arabic, a face-swap photo of Ganjar Pranowo with a porn actress, and a digitally altered photo of Anies Baswedan wearing the raiments of a Catholic priest.

She said it was too soon to assess how much impact the latest AI tech was having on Indonesian voters.

"However, the rather active participation in the comment sections of these materials suggests a high level of public engagement and interest," she said.

"This also suggests that these contents could potentially shape public opinion.

"If utilised effectively, as seen in previous elections with the use of buzzers, memes, and various forms of social media propaganda, latest AI technology and its content could have a notable impact on the Indonesia election results."

Dr Jalli said tech companies should invest in AI algorithms and content moderation — in collaboration with local experts and informed by local nuances and context — to detect and counteract misinformation, while governments should create clear policies on the issues.

Civil society organisations needed to hold platforms and governments accountable and promote media and information literacy, she added.

"Users also play a part by critically evaluating the content they encounter and not to be highly dependent on AI tools," she said.

"I feel like the issue at the moment is how users of AI tend to place high trust in AI tools without realising its existing bias and potential misinformation."

Social media platforms are often used to spread misinformation. (Reuters)

Building resilient democracies

The developers of some AI apps and social media platforms have been taking steps to limit the harm their products can cause to democracy.

Facebook owner Meta in November last year announced it was barring political campaigns and advertisers in other regulated industries from using its new generative AI advertising products.

Then last week ChatGPT developer OpenAI announced a range of measures to ensure that its systems are "built, deployed, and used safely" including preventing them from being used for political campaigning and lobbying.

It also banned its systems from being used to deter people from participating in democratic processes.

"As we prepare for elections in 2024 across the world’s largest democracies, our approach is to continue our platform safety work by elevating accurate voting information, enforcing measured policies, and improving transparency," the company said in a blog post.

AI expert Aviv Ovadya is a researcher at the Berkman Klein Center for Internet and Society at Harvard. (Supplied)

However, AI expert Aviv Ovadya, a researcher at the Berkman Klein Center for Internet and Society at Harvard, said that to some extent the AI genie was out of the bottle.

"We are in a challenging position because not only do we now have tools that can be abused to accelerate disinformation, manipulation, and polarisation, but many of those tools are now completely outside of any government's control," Mr Ovadya told the ABC.

"While deepfake images and videos cause confusion and worse, what is even scarier is the way AI-driven text and voice software can essentially mimic talking to a real human — one which can take its time to build trust and influence.

"Many of the building blocks for this are now open source, so it's just a matter of time before they are significantly abused, and there is little that can be done by AI developers at that point; but if we act now, we might be able to ensure the next wave of AI is harder to weaponise.

"We need to be thinking about how to make society resilient to all of these sorts of attacks — not just preventing the technology from being developed at all."

Western Sydney University has utilised a citizens' assembly process to empower students. (Supplied)

Mr Ovadya said social media platforms should optimise their algorithms to promote "bridging" content that brings people together instead of dividing them, and implement authenticity and provenance infrastructure.

"The biggest thing one can do to make democracy resilient to AI, however, is to improve democracy itself," he said.

He suggested that alternative approaches such as "citizen assemblies" could make democracies less adversarial and more resistant to misinformation.

"While Australia has not had a national citizens' assembly yet, I've been very impressed by the work across Australia by organisations like newDemocracy and Mosaic Lab to run citizens' assemblies at the state and local level," he said.

"I am increasingly concerned that if we don't move more of the most polarising decisions to such processes, we won't be ready with more resilient forms of democracy in time for the impacts of AI — and perhaps it's already too late."
