Breaking Rust: AI artist tops US chart for first time as study reveals alarming recognition stats

An AI-generated music persona has topped a US Billboard chart for the first time, just as a “first-of-its-kind” study from French streaming service Deezer reveals that 97 per cent of people “can’t tell the difference” between real music and AI-generated music.

It’s a first, and not one worth celebrating.

A song generated by artificial intelligence has topped the charts in the US for the first time, as a country “artist” named Breaking Rust has landed the Number 1 spot on Billboard’s Country Digital Song Sales chart.

The viral track, ‘Walk My Walk’, has over 3.5 million streams on Spotify – a platform on which “he” is a verified artist, and which has prior form when it comes to promoting AI-generated bands.

Other Breaking Rust songs like ‘Livin’ on Borrowed Time’ and ‘Whiskey Don’t Talk Back’ have amassed more than 4 million and 1 million streams respectively. And if you just cringed your way into a mild aneurysm when reading that last song title, you’re only human.

Not all that much is known about Breaking Rust, apart from “his” nearly 43,000 followers on Instagram and a Linktree bio that reads: “Music for the fighters and the dreamers.”

How profound.

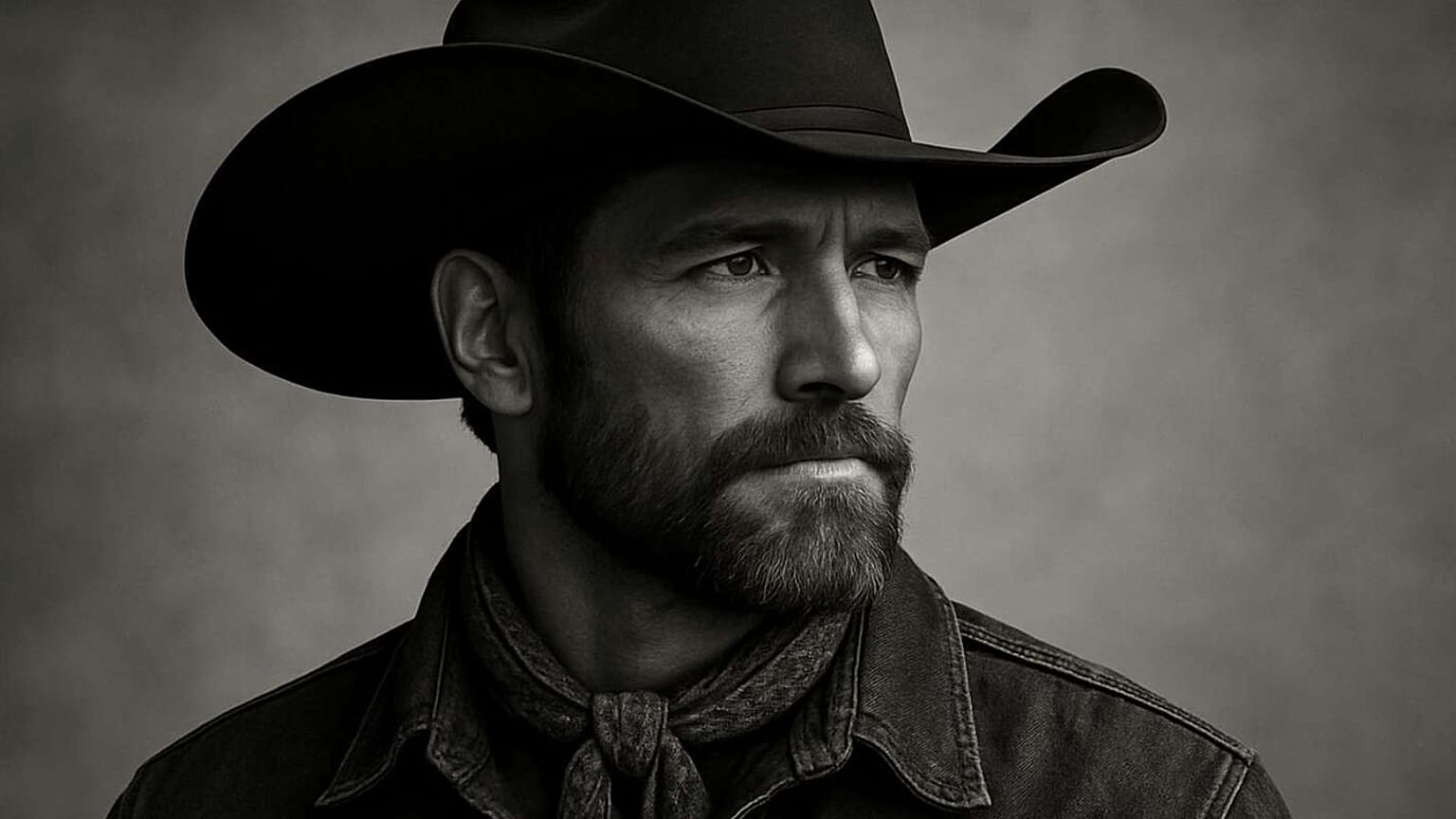

Any gluttons for clichéd dross who choose to head to the Instagram page will find several generic AI-generated videos of stubbly cowboys looking like factory-reject Ben Afflecks walking on snow-covered train tracks, lifting weights and holding their hats in the rain. In other words, stereotypical Outlaw Country fantasies that reek of fragile masculinity and necktie fetishes.

Still, fans are clearly loving it, seemingly unbothered that this “Soul Music for Us” glaringly lacks, you know, a soul.

“Love your voice! Awesome song writing! I want more,” reads one comment on a video, while another says: “I don’t know if this is a real guy but his songs are seriously some of my favorite in life.”

“I LIKE THE SONG, NO MATTER WHO CREATED IT!” screams one comment on YouTube.

Some listeners also appear not to realize that Breaking Rust isn’t human, as fans are complimenting the lyricism (strewth!) and even asking the “artist” to go on tour.

This is not the first time that an AI-generated act has debuted on Billboard’s charts. One notable example is Xania Monet, who made headlines in September when the track ‘Let Go, Let Go’ climbed to No. 3 (Gospel) and ‘How Was I Supposed To Know’ peaked at No. 20 (R&B).

Created by Telisha “Nikki” Jones using the AI platform Suno, Monet has been a particularly visible AI “artist” – one which even triggered a bidding war to sign “her”. Hallwood Media, led by former Interscope executive Neil Jacobson, ultimately won and signed Monet to a reported multimillion-dollar deal.

Who knows whether the same will happen for Breaking Rust, but the chart-topping success does signal a continuing shift in the music industry.

There have been concerns about the use of generative AI in all creative sectors, from Hollywood, with the writers’ and actors’ guild strikes and the creation of the so-called AI actress Tilly Norwood, to the recent internet meltdown over Coca-Cola making its Christmas adverts entirely AI-generated. And the more AI-created bands and musicians continue to proliferate, the more real human artists will struggle to break through – let alone generate revenues from their craft.

As Josh Antonuccio, director of the School of Media Arts and Studies at Ohio University, recently told Newsweek: “Whether it’s lyrical assistance, AI-assisted ideation, or wholesale artist and song creation, AI-generated content is going to become a much more common reality and will continue to find its way into the charts.”

He added: “The real question starts to become 'will fans care about how it’s made?'”

Indeed, the success of Breaking Rust comes as a new, “first-of-its-kind” study has found that 97 per cent of people “can’t tell the difference” between real music and AI-generated music.

The survey, conducted by French streaming service Deezer and research firm Ipsos, asked around 9,000 people from eight different countries (Brazil, Canada, France, Germany, Japan, the Netherlands, the UK and the US) to listen to three tracks to determine which was fully AI-generated.

According to the report, 97 per cent of those respondents “failed” – with 52 per cent saying they felt “uncomfortable” not knowing the difference.

The study also found that 55 per cent of respondents were “curious” about AI-generated music, and that 66 per cent said they would listen to it at least once, out of curiosity.

However, only 19 per cent said they felt that they could trust AI, while 51 per cent said they believe the use of AI in music production could lead to “generic”-sounding music.

“The survey results clearly show that people care about music and want to know if they’re listening to AI or human made tracks or not,” said Alexis Lanternier, CEO of Deezer. “There’s also no doubt that there are concerns about how AI-generated music will affect the livelihood of artists, music creation and that AI companies shouldn’t be allowed to train their models on copyrighted material.”

Earlier this year, artists including Paul McCartney, Kate Bush, Dua Lipa and Elton John urged UK Prime Minister Keir Starmer to protect the work of creatives, with Sir Elton posting a statement saying that “creative copyright is the lifeblood of the creative industries”. He added that government proposals that would let AI companies train their systems on copyright-protected work without permission left the door “wide open for an artist’s life work to be stolen.”

Sir Elton previously claimed that AI would “dilute and threaten young artists’ earnings”, a statement backed by thousands of real-life artists who continue to petition the music industry to implement safeguards related to artificial intelligence and copyright.

In February, more than 1,000 artists, including Annie Lennox, Damon Albarn and Radiohead, launched a silent album titled 'Is This What We Want?', in protest against UK government plans that could allow AI companies to use copyrighted content without consent.

The album, featuring the sounds of empty studios and performance spaces, was designed to be a symbol of the negative impact controversial government proposals could have on musicians' livelihoods.

Kate Bush, one of the leading voices in the protest, expressed her concerns by saying: "In the music of the future, will our voices go unheard?"

The question still stands and feels more urgent than ever, considering the chart-topping sounds of Breaking Rust.

Give us empty studio sounds over soulless cowboy platitudes any day of the week.

These videos of Ukrainian soldiers are deepfakes generated from the faces of Russian streamers

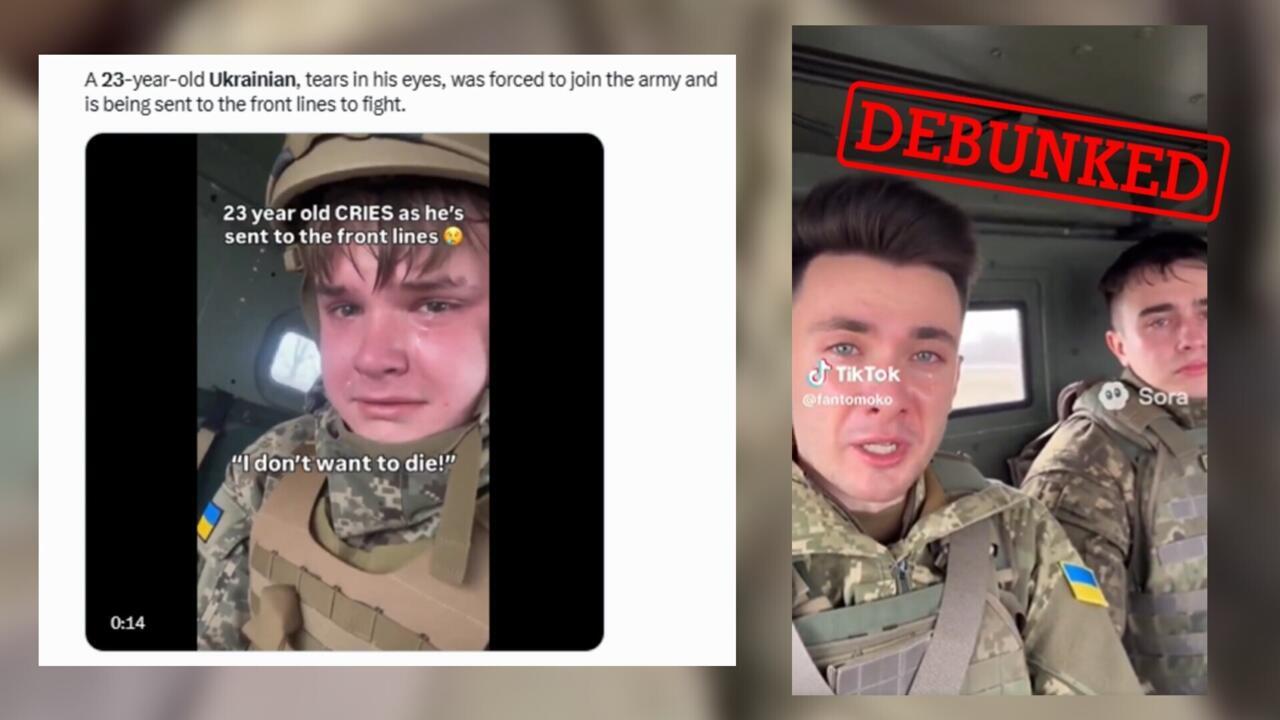

A number of videos have been circulating online that claim to show tearful Ukrainian soldiers who say they were mobilised against their will. In reality, these videos are all deepfakes generated using the faces of Russian video game streamers.

Issued on: 13/11/2025

By: The FRANCE 24 Observers / Quang Pham

These videos, which claim to show Ukrainian soldiers refusing to fight, were actually generated by artificial intelligence. They were posted on social media on November 2, 2025. © X

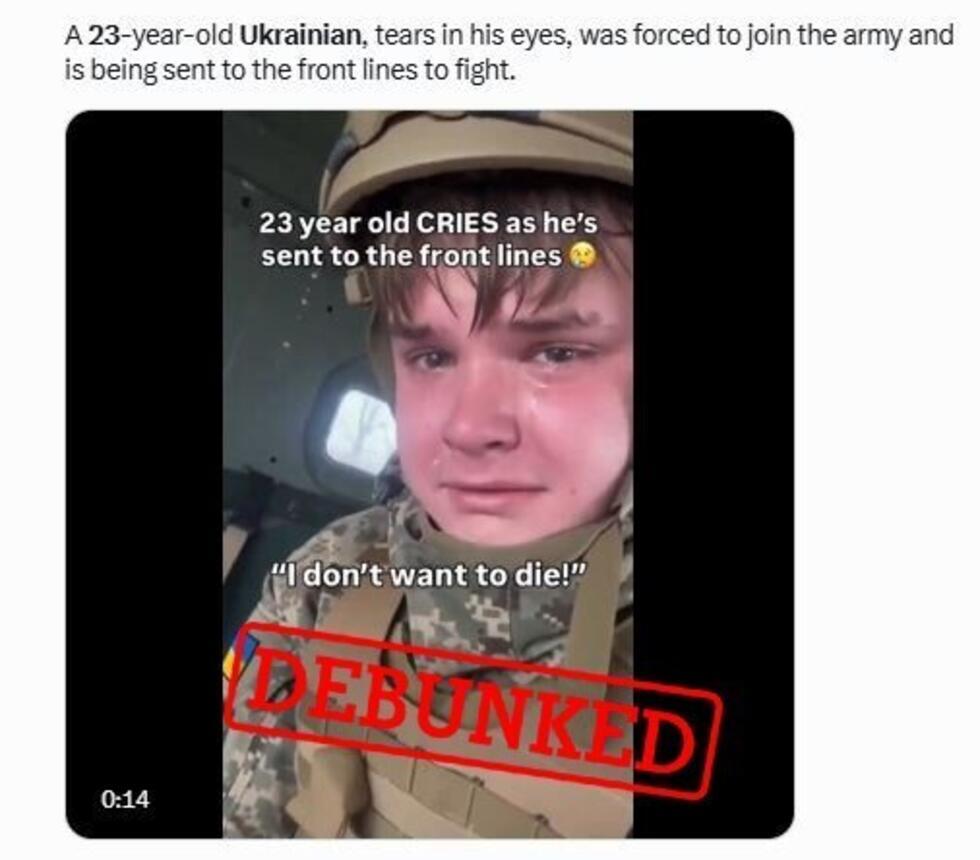

At first glance, the video that has been widely shared on X and TikTok since November 2 is a tearjerker. It claims to show a young Ukrainian soldier – more boy than man – crying and saying he doesn’t want to go and fight:

“They mobilised me. I am leaving for Chasiv Yar [Editor’s note: a town in the Donetsk oblast, or administrative region, in eastern Ukraine]. Help me, I don’t want to die. I am only 23. Help me, please.”

“Ukraine is sending its young people to the slaughterhouse,” commented one social media user who posted the video on X. This user commonly shares both anti-Semitic and pro-Russian views.

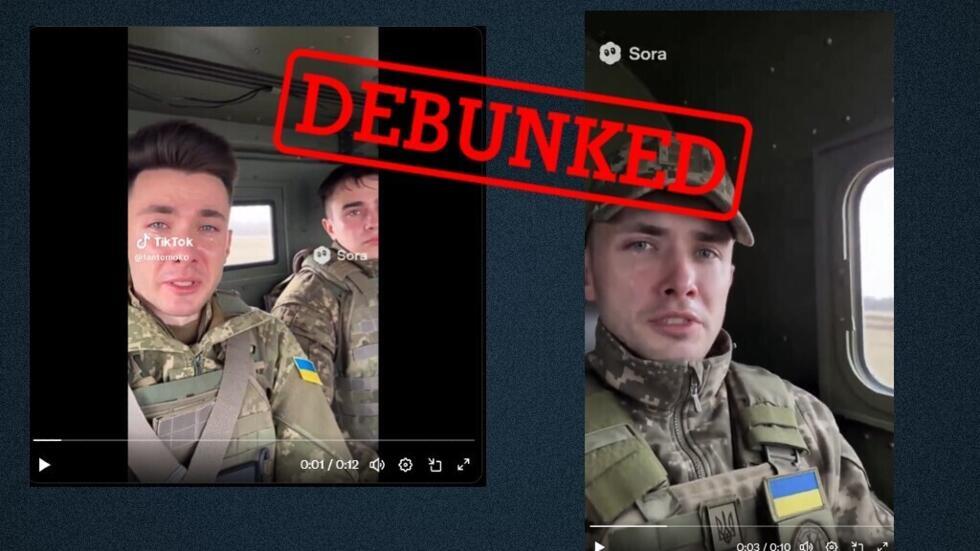

This video isn’t the only one – dozens of similar videos have also been circulating on other social media platforms, especially TikTok. These videos claim to show Ukrainian soldiers deployed against their will to Pokrovsk, an important strategic town in the Donbas region that is the epicentre of a Russian offensive:

"They are bringing us to Pokrovsk, we don’t want to go, please.

Someone help us, please.

We don’t know what to do, they are bringing us by force.

My god, mama, mama, I don’t want to."

Fake videos generated by artificial intelligence

While it is true that the Ukrainian army has been reckoning with a growing number of desertions in recent months and that many Ukrainian men do want to avoid serving, these videos are fake. They were all generated by artificial intelligence (AI).

There are a few clues.

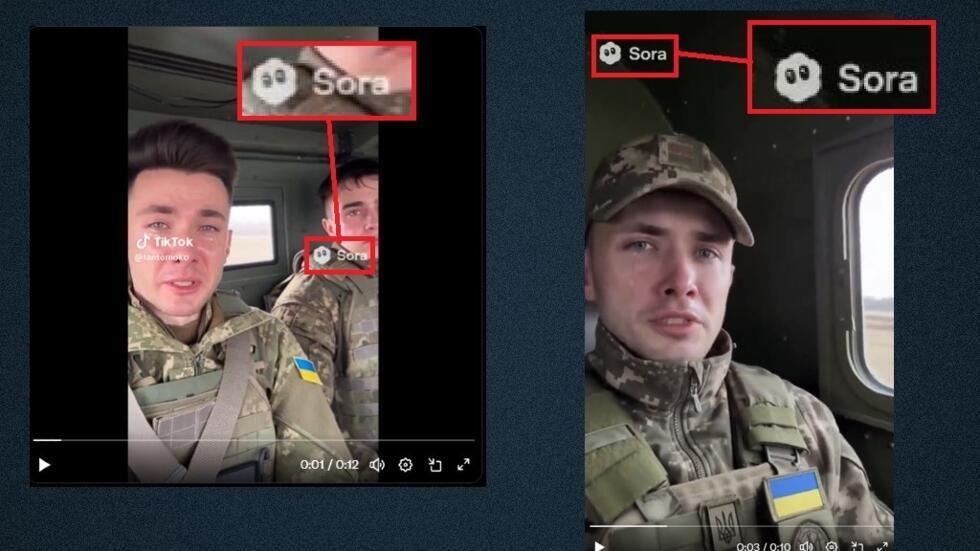

First of all, the videos of Ukrainian soldiers claiming that they don’t want to be deployed to Pokrovsk feature a watermark: an image of a small cloud and the word Sora. That’s the visual logo – or signature – of Sora 2, an artificial intelligence video generator created by OpenAI, which adds these watermarks to generated videos in an attempt to prevent them from being used out of context.

The video of the 23-year-old soldier supposedly being shipped off to Chasiv Yar doesn’t have a watermark. However, we have previously reported in another article that the Sora AI watermark can be removed. And there are other clues that this video, too, was generated by AI.

First, the soldier’s account of his circumstances doesn’t match how conscription actually works in Ukraine. He claims that he was conscripted at 23. However, the Ukrainian parliament has set the minimum age for conscription at 25. People under that age can volunteer, but they can’t be conscripted.

The helmet that the soldier is wearing also features anomalies – clues that it was generated by artificial intelligence. The man is wearing a NIJ IIIA ballistic helmet (which offers protection against 9mm bullets). However, there are differences between the helmet the “soldier” is wearing and the real helmet, which you can see on a specialist site. For example, a screw that appears round on the real helmet looks deformed in the AI-created video. The helmet in the video has a round piece that doesn’t appear on the real model. AI has a tendency to add elements when it is generating images of objects.

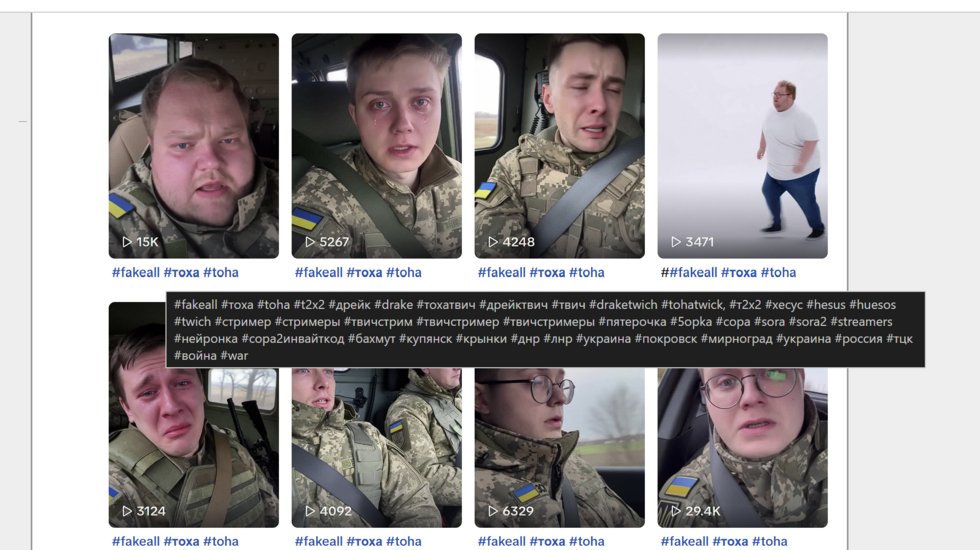

All of these videos of fake Ukrainian soldiers came from the same TikTok profile – "fantomoko". This account’s watermark appears on these videos.

The profile, now offline, seems to have published mainly fake, AI-generated videos. A large number of the videos shared by this account feature the Sora 2 watermark as well as the hashtags #fakeall and #sora2.

The Russian streamers whose identities were stolen

Italian fact-checking outlet open.online first reported a strange detail about these videos and the identity theft behind them: they appeared to have been created from the faces of Russian streamers, people who broadcast themselves live online, in this case while playing video games.

The Sora 2 AI video generator only takes a few seconds to create "deepfakes", which appear to show real people speaking with real voices but in fact are artificial.

The supposed 23-year-old soldier featured in the video was created using the face of kussia88, a Russian streamer with a Twitch profile that has 1.3 million followers.

The soldier complaining about being shipped to Pokrovsk was generated using videos of Russian streamer Aleksei Gubanov, known as "JesusAVGN". Gubanov actually opposes Russian President Vladimir Putin’s regime and is now based in the United States.

Our team spoke to Aleksei Gubanov, who was horrified by the way his face was used to create these videos:

“I have no connection whatsoever to these videos – all of them were created by someone using the Sora neural network.

Moreover, I personally drew attention to these videos during my recent livestream, and I warned my audience that someone is deliberately trying to sow discontent in society by spreading such content. These materials play directly into the hands of Russian propaganda and cause serious harm to Ukraine, as they quickly gain a large number of views – and people, unfortunately, tend to believe them.”

The Centre for Countering Disinformation, a body linked to the Ukrainian government, spoke out about the video of the 23-year-old soldier. They described the video as fake news that “promotes the narrative of conscription at the age of 22-23” despite the fact that the age of military service is still 25. The aim of this disinformation campaign? "To sow distrust within Ukrainian society, disrupt mobilisation efforts and discredit Ukraine in the eyes of the international community,” the organisation said on X.

This article has been translated from the original in French.
