This Is The Most Exciting Crisis in Cosmology

MICHELLE STARR

29 AUGUST 2020

For as long as there has been a Universe, space has been expanding. It winked into existence roughly 13.8 billion years ago, and has been puffing up ever since, like a giant cosmic balloon.

The current rate of this expansion is called the Hubble constant, or H0, and it's one of the fundamental measurements of the Universe.

If you know the Hubble constant, you can calculate the age of the Universe. You can calculate the size of the Universe. You can more accurately calculate the influence of the mysterious dark energy that drives the expansion of the Universe. And, fun fact, H0 is one of the values required to calculate intergalactic distances.

However, there's a huge problem. We have several highly precise methods for determining the Hubble constant... and these methods keep returning different results for an unknown reason.

It could be a problem with the calibration of our measurement techniques - the standard candles and standard rulers we use to measure cosmic distances (more on those in a moment). It could be some unknown property of dark energy.

Or perhaps our understanding of fundamental physics is incomplete. To resolve this might well require a breakthrough of the kind that earns Nobel Prizes.

So, where do we begin?

The basics

The Hubble constant is typically expressed with a seemingly unusual combination of distance and time units - kilometres per second per megaparsec, or (km/s)/Mpc; a megaparsec is around 3.3 million light-years.

That combination is needed because space itself is expanding, so stuff that's farther away from us appears to be receding faster. Hypothetically, if we found that a galaxy 1 megaparsec away was receding at a rate of 10 km/s, and a galaxy 10 megaparsecs away appeared to be receding at 100 km/s, we could describe that relation as 10 km/s per megaparsec.

In other words, determining the proportional relation between how fast galaxies are moving away from us (km/s) and how far they are (Mpc) is what gives us the value of H0.
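To make that proportionality concrete, here is a minimal Python sketch that fits a straight line through the origin to the two hypothetical galaxies from the example above. The function name `hubble_constant` is ours, purely for illustration; real analyses use far larger samples and account for measurement uncertainties.

```python
def hubble_constant(distances_mpc, velocities_km_s):
    """Least-squares slope of v = H0 * d through the origin, in (km/s)/Mpc."""
    numerator = sum(d * v for d, v in zip(distances_mpc, velocities_km_s))
    denominator = sum(d * d for d in distances_mpc)
    return numerator / denominator


# The two hypothetical galaxies from the text (not real measurements).
distances = [1.0, 10.0]      # megaparsecs
velocities = [10.0, 100.0]   # km/s

h0 = hubble_constant(distances, velocities)
print(h0)  # 10.0 (km/s)/Mpc
```

With only two perfectly proportional points the fit is trivial, but the same slope calculation applies when many noisy galaxies are involved.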

If only there was an easy way to measure all this.

Cosmologists have devised a number of ways to arrive at the Hubble constant, but there are two main methods. They involve either standard rulers, or standard candles.

Standard rulers and their signals

Standard rulers are based on signals from a time in the early Universe called the Epoch of Recombination. After the Big Bang, the Universe was so hot and dense, atoms couldn't form. Instead, there existed only a hot, opaque plasma fog; after about 380,000 years of cooling and expansion, that plasma finally started recombining into atoms.

We rely on two signals from this period. The first is the cosmic microwave background (CMB) - the light that escaped the plasma fog as matter recombined, and space became transparent. This first light - faint as it is by now - still fills the Universe uniformly in all directions.

Fluctuations in the temperature of the CMB trace expansions and contractions in the early Universe, and they feed into calculations that let us infer our Universe's expansion history.

The second signal is called the baryon acoustic oscillation, and it's the result of spherical acoustic density waves that propagated through the plasma fog of the early Universe, coming to a standstill at the Epoch of Recombination.

The distance this acoustic wave could have travelled during this timeframe is approximately 150 megaparsecs; this is detectable in density variations throughout the history of the Universe, providing a 'ruler' whereby to measure distances.
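The logic of a standard ruler can be sketched in a few lines of Python: if you know how long the ruler really is, the angle it spans on the sky tells you how far away it is. This is only a small-angle, flat-space approximation; the 1-degree angle is a made-up input, and a real analysis has to account for how space expanded while the light was travelling.

```python
import math

RULER_MPC = 150.0  # approximate acoustic scale quoted in the text


def distance_from_angle(angle_deg, ruler_mpc=RULER_MPC):
    """Small-angle estimate: distance = ruler length / angle in radians."""
    return ruler_mpc / math.radians(angle_deg)


# Hypothetical observation: the ruler appears to span 1 degree on the sky.
distance_mpc = distance_from_angle(1.0)
print(round(distance_mpc))  # roughly 8,594 Mpc under these simplifications
```

The smaller the angle the ruler appears to span, the farther away it must be.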

Standard candles in the sky

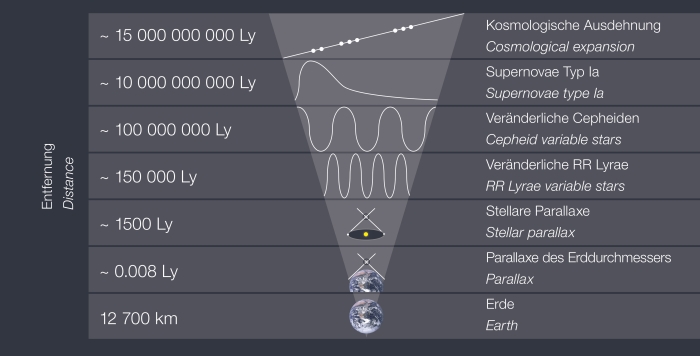

Standard candles, on the other hand, are distance measurements based on objects in the local Universe. These can't just be any old stars or galaxies - they need to be objects of known intrinsic brightness, such as Type Ia supernovae, Cepheid variable stars, or stars at the tip of the red giant branch.
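Knowing the intrinsic brightness is what turns an observed brightness into a distance. A minimal Python sketch using the astronomer's distance modulus shows the idea; the absolute magnitude of -19.3 is an assumed, typical textbook value for a Type Ia supernova, and the apparent magnitude is invented for the example.

```python
def distance_parsecs(apparent_mag, absolute_mag):
    """Distance modulus: m - M = 5 * log10(d / 10 pc), solved for d."""
    return 10 ** ((apparent_mag - absolute_mag + 5) / 5)


ASSUMED_ABSOLUTE_MAG = -19.3  # assumption: typical Type Ia peak brightness
observed_mag = 15.7           # hypothetical observation

d_mpc = distance_parsecs(observed_mag, ASSUMED_ABSOLUTE_MAG) / 1e6
print(d_mpc)  # about 100 Mpc
```

If the assumed intrinsic brightness is slightly off, every distance derived from it shifts by the same factor, which is why calibration matters so much.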

"When you're looking at the stars in the sky, you can measure their positions left and right really precisely, you can point at them really precisely, but you can't tell how far away they are," astrophysicist Tamara Davis, from the University of Queensland in Australia, told ScienceAlert.

"It's really difficult to tell the difference between something that's really bright and far away, or something that's faint and close. So, the way people measure it is to find something that's standard in some way. A standard candle is something of known brightness."

Both standard rulers and standard candles are as precise as we can get them, which is to say - very. And they both return different results when used to calculate the Hubble constant.

According to standard rulers, that is, the early Universe, H0 is around 67 kilometres per second per megaparsec. For the standard candles - the local Universe - it's around 74 kilometres per second per megaparsec.

Neither of these results has an error margin that comes even close to closing the gap between them.
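One common way to express how badly two measurements disagree is to divide the gap by their combined uncertainty. The Python sketch below does that under loud assumptions: the error bars of 1 and 2 (km/s)/Mpc are illustrative placeholders rather than published uncertainties, and the two errors are treated as independent and Gaussian.

```python
import math


def tension_sigma(value_a, err_a, value_b, err_b):
    """Gap between two values in units of their combined standard error."""
    return abs(value_a - value_b) / math.hypot(err_a, err_b)


# Rounded values from the text; error bars are illustrative assumptions.
sigma = tension_sigma(67.0, 1.0, 74.0, 2.0)
print(round(sigma, 1))  # about 3.1
```

The tighter the error bars become, the larger that number gets for the same gap.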

The history of the gap

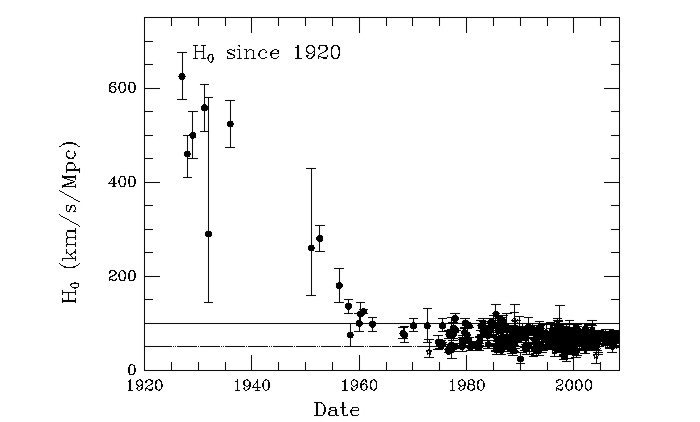

Astronomers Alexander Friedmann and Georges Lemaître first noticed that the Universe was expanding all the way back in the 1920s. By 1929, Edwin Hubble calculated the rate of expansion based on standard candles called Cepheid variable stars, which periodically vary in brightness; since the timing of that variability is linked to these stars' intrinsic brightness, they make for an excellent distance measurement tool.

But the distance calibrations weren't quite right, which carried over into the cosmic distance measurements. Thus, the early calculations returned an H0 of around 500 kilometres per second per megaparsec.

"There was an immediate problem discovered with that because geologists, who were studying Earth, knew that Earth was something like 4 billion years old," Davis said.

"If you calculated the rate of expansion as 500 km/s, you can calculate how long it would have taken to get to the current size of the Universe, and that would have been about 2 billion years. That meant Earth was older than the Universe - which is not possible - and so people went bah! this 'expansion of the Universe' thing is all a farce."

That's where the Hubble constant remained until around the 1950s, when German astronomer Walter Baade discovered that there are two types of Cepheid variable stars, allowing for a refined calculation of the Hubble constant. It was brought down to around 100 (km/s)/Mpc.
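The age argument can be checked with a back-of-the-envelope calculation: the inverse of the Hubble constant, known as the Hubble time, gives a rough timescale for the expansion. The Python sketch below assumes the expansion rate never changed, so it is only a ballpark figure rather than a proper age; the conversion constants are approximate.

```python
KM_PER_MPC = 3.0857e19       # kilometres in one megaparsec (approximate)
SECONDS_PER_GYR = 3.15576e16  # seconds in a billion Julian years


def hubble_time_gyr(h0_km_s_mpc):
    """Rough expansion timescale 1/H0, in billions of years."""
    return KM_PER_MPC / h0_km_s_mpc / SECONDS_PER_GYR


for h0 in (500.0, 100.0, 74.0, 67.0):
    print(h0, round(hubble_time_gyr(h0), 1))
# 500 gives roughly 2.0 billion years, 100 roughly 9.8,
# 74 roughly 13.2, and 67 roughly 14.6.
```

The old value of 500 really does produce a Universe younger than Earth, which is exactly the problem the geologists flagged.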

(John Huchra/Harvard-Smithsonian Center for Astrophysics)

From there, you know how it goes - you can see the progression on the graph above. As our technology, techniques, and understanding grew ever more refined, so too did the Hubble constant calculations, along with our confidence in them.

"We used to have error bars of plus or minus 50," Davis said. "Now we have error bars of plus or minus 1 or 2. Because the measurements have become so good, these techniques are now sufficiently different that it's hard to explain by measurement errors."

What's the big deal?

Today, the difference between the two values, known as the Hubble tension, may not seem like a large number - just 9.4 percent.
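How big the gap looks as a percentage depends on which value you measure it against, and on the unrounded measurements, which aren't given here. A quick Python sketch with the rounded values of 67 and 74 shows the range; the function name `percent_gap` is ours, for illustration only.

```python
def percent_gap(low, high, baseline):
    """Difference between two values as a percentage of a chosen baseline."""
    return 100.0 * (high - low) / baseline


early, local = 67.0, 74.0  # rounded values from the text

gap_vs_local = percent_gap(early, local, local)               # about 9.5
gap_vs_mean = percent_gap(early, local, (early + local) / 2)  # about 9.9
gap_vs_early = percent_gap(early, local, early)               # about 10.4
print(round(gap_vs_local, 1), round(gap_vs_mean, 1), round(gap_vs_early, 1))
```

Whichever baseline is used, the answer lands at roughly a tenth of the value itself.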

But cosmologists are yet to figure out wherein lies the cause of this discrepancy. The most obvious problem would be one of calibration, but its source remains elusive.

Several different teams, for instance, have calculated H0 from the CMB based on measurements obtained by the Planck space observatory. It's possible the problem could lie with our interpretation of the data; but a 2019 CMB survey by a different instrument, the Atacama Cosmology Telescope, agreed with the Planck data.

In addition, H0 calculations from the baryon acoustic oscillation measured by an entirely different instrument, the Sloan Digital Sky Survey, returned the same result.

Perhaps our standard candles are leading us astray, too. These objects are grouped into stages, forming the 'cosmic distance ladder'. First up, parallax - how nearby stars seem to change position against more distant stars - is used to validate the distances to the two types of variable stars.

(design und mehr)

The next step out from variable stars is extragalactic Type Ia supernovae. It's like climbing a ladder farther and farther out into the cosmos, and "even a tiny error in one of the steps can propagate into a larger error later," Davis pointed out.
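Why small errors matter can be seen in a toy Python model of the ladder, in which each rung calibrates the next and any fractional bias multiplies through. The bias values below are hypothetical, chosen only to show the compounding; they are not estimates of real calibration errors.

```python
def ladder_bias(step_biases):
    """Combined multiplicative bias on the final distance after all rungs."""
    total = 1.0
    for bias in step_biases:
        total *= bias
    return total


# Hypothetical biases: each rung overestimates distance by 1-2 percent.
distance_bias = ladder_bias([1.01, 1.02, 1.01])

# Since H0 is velocity divided by distance, an overestimated distance
# pushes the inferred H0 down by the inverse factor.
h0_bias = 1.0 / distance_bias
print(round(distance_bias, 4), round(h0_bias, 4))  # about 1.0405 and 0.9611
```

Three small errors pointing the same way shift the result by about 4 percent in this toy case; errors pointing in opposite directions would partly cancel.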

Other attempts to tackle the problem involve thinking about the very space that surrounds us in a different way.

The Hubble bubble hypothesis, for example, is based on the idea that the Milky Way is located in a relatively low-density 'bubble' in the Universe, surrounded by higher density material. The gravitational effect of this higher density material would pull on the space inside the bubble, making it so that the local space appears to expand at a faster rate than the early Universe.

However, even if all of the above were indeed contributing to the problem, they would hardly add up to that 9.4 percent discrepancy.

"People have been quite inventive in coming up with possible ways that the methods could go wrong. And so far, no one has convincingly argued any one particular error could explain the differences that we see," cosmologist Matthew Colless, from the Australian National University, told ScienceAlert.

"It's possible that a whole bunch of different small errors all lined up the same way; but these sources of error are not related to each other. It would be very surprising and extremely unlucky if it just so happened that every different sort of error we made, all piled up in one direction and took us one way."

Maybe the blame lies with physics?

In pretty much all other respects, our cosmological models work remarkably well. Thus, if you try to alter one of the basic components of the Hubble constant, something else tends to break.

"You can change the standard ruler," Colless said, "but then you break some other observation that's been made - the amount of matter in the Universe, the mass of neutrinos - things like that, well measured and explained by the current model, but broken by the changes you need to make to 'fix' the standard ruler."

Which leads to - what the heck are we missing? Is it a problem with... fundamental physics?

"I am pretty soundly thinking that it's likely to be an error," Davis noted. "But it is genuinely difficult to explain where that error could have come from in the current measurements. So I'm almost 50-50. It's an intriguing discrepancy. And it's really interesting to try and figure out why."

If our options are "humans stuffed something up" and "actually, physics is wrong", the blame typically tends to fall on the former.

Actually, that's an understatement. "New physics" is an exceedingly rare answer. But the Hubble tension is a slippery problem, defying every attempt at a solution cosmologists can come up with.

Which makes it an incredibly exciting one.

Most of these specks are galaxies. (NASA, ESA, S. Beckwith (STScI) and the HUDF Team)

It's possible there's something general relativity hasn't accounted for. That would be wild: Einstein's theory has survived test after cosmic test. But we can't discount the possibility.

Naturally, there are other possibilities as well, such as the huge unknown of dark energy. We don't know what dark energy is, but it seems to be a fundamental force, responsible for the negative pressure that's accelerating the expansion of our Universe. Maybe.

"Our only vague idea is that it is Einstein's cosmological constant, the energy of the vacuum," said Colless. "But we don't really know exactly how that works, because we don't have a convincing way for predicting what the value of the cosmological constant should be."

Alternatively, it could be some hole in our understanding of gravity, although "new physics that affects a theory of fundamental and general relativity is extremely rare," Colless pointed out.

"If there was new physics, and if it turned out to require a modification to general relativity, that would definitely be Nobel Prize-level breakthrough physics."

The only way forward

Whether it's a calibration error, a huge mistake in our current understanding of physics, or something else altogether, there is only one way forward if we're going to fix the Hubble constant - doing more science.

Firstly, cosmologists can work with the current data we already have on standard candles and standard rulers, refining them further and reducing the error bars even more. To supplement this, we can also obtain new data.

Colless, for instance, is working on a project in Australia using the cutting-edge TAIPAN instrument newly installed at Siding Spring Observatory. That team will be surveying millions of galaxies in the local Universe to measure the baryon acoustic oscillation as close to us as possible, to account for any measurement problems produced by the distance.

"We're going to measure 2 million very nearby galaxies - over the whole Southern Hemisphere and a little bit of the Northern Hemisphere - as nearby as we possibly can, look for this signal of baryon acoustic oscillation, and measure that scale with 1 percent precision at very low redshift."

This is the same volume of space that the distance ladders cover. So, if the TAIPAN results in that same volume return an H0 of 67 kilometres per second per megaparsec, the problem might lie with our standard candles.

On the other hand, if the results are closer to 74 kilometres per second per megaparsec, this would suggest the standard candles are more robust.

Emerging research fields are also an option; not standard candles or standard rulers, but standard sirens, based on gravitational wave astronomy - the ripples in spacetime propagated by massive collisions between black holes and neutron stars.

Animation of two neutron stars colliding. (Caltech/YouTube)

"They're similar to the supernovae in that we know how bright they are intrinsically," Davis said.

"Basically, it's like a standard candle. It's sometimes called a standard siren, because the frequency of the gravitational waves tells you how bright it is. Because we know - from general relativity - the relationship between the frequency and the brightness, we don't have to do any calibration. We just have a number, which makes it much, much cleaner than some of these other methods."

It's still hard to measure the Hubble constant with gravitational waves. But initial calculations are promising. In 2017, a neutron star collision allowed astronomers to narrow it down to around 70 (km/s)/Mpc, with error bars large enough on either side to cover both 67 and 74, and then some.

That, Davis said, was stunning.

"We've measured thousands of supernovae now," she said. "We've measured millions of galaxies to measure the baryon acoustic oscillation, we've surveyed the entire sky to measure the cosmic microwave background.

"And this single object, this one measurement of a gravitational wave, got an error bar that was about 10 percent, which took decades of work on the other probes."

Gravitational wave astronomy is still in its infancy - it's only a matter of time before we detect enough neutron star collisions to sufficiently refine those results. With luck, that will help ferret out the cause of the Hubble tension.

Either way, it's going to make history. New physics would, of course, be amazing - but an error in the distance ladder would rock astronomy. It could mean that there's something we don't understand about Type Ia supernovae, or how stars evolve.

Whichever way it shakes out, solving the Hubble tension will have effects that ripple out across astronomical science.

"That's why cosmologists are so excited about this. Because cosmological theory works so well, we're so excited when we find something that it failed to predict. Because when things break, that's when you learn," Colless said.

"Science is all about trial and error - and it's in the error that you learn something new."