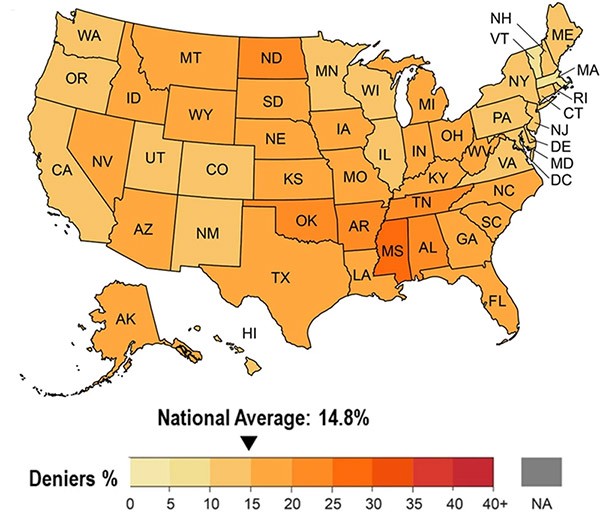

Just under 15% of people in the United States deny that climate change is happening, according to an analysis of more than seven million social media posts.

The logo of Neuralink, a brain-implant company founded by Elon Musk, superimposed on an illustration of the human brain. Credit: Jonathan Raa/NurPhoto via Getty

Neuralink’s ‘brain reader’ underwhelming

The first person to receive a brain-monitoring device from neurotechnology company Neuralink can move a computer cursor with their mind, founder Elon Musk announced last week. Researchers are concerned by the secrecy surrounding the device’s safety and performance — and underwhelmed by the achievement. “A human controlling a cursor is nothing new,” says brain–computer interface researcher Bolu Ajiboye.

When chatbots go off the rails

Last week, ChatGPT suddenly started spewing nonsense, mixing English and Spanish, making up words or repeating the same phrase over and over. Less than 24 hours later, the chatbot’s creator OpenAI reported that it had fixed the bug, which it said was introduced by an “optimization to the user experience”.

Meanwhile, Google has paused the ability of its AI tool Gemini to generate images of people after it was criticized for depicting historically white figures (such as the US Founding Fathers or Nazi-era German soldiers) as people of colour. Prabhakar Raghavan, a senior vice president at Google, explains that Gemini depicts a range of ethnicities and other characteristics whenever users don’t ask for specifics. “Our tuning to ensure that Gemini showed a range of people failed to account for cases that should clearly not show a range.”

“In the end, generative AI is a kind of alchemy,” says psychologist Gary Marcus about the ChatGPT glitch. “The reality, though, is that these systems have never been stable.” The episode shows that we need to create more-transparent and tractable systems, he argues.

The Register | 4 min read & The Verge | 3 min read

AI reveals extent of climate denialism

Just under 15% of people in the United States deny that climate change is happening, according to an AI-supported analysis of more than seven million posts on X (formerly called Twitter). A deep-learning model was used to classify the social media posts as either believing or denying climate change. “What is scary, and somewhat disheartening, is how divided the worlds are between climate change belief and denial,” says sustainability researcher and study co-author Joshua Newell. “The respective X echo chambers have little communication and interaction between them.”

Scientific American | 4 min read

Reference: Scientific Reports paper

(Gounaridis, D., Newell, J.P./Sci Rep)

Features & opinion

AI’s environmental costs are soaring

Within years, AI systems could consume as much energy as entire countries. A first-of-its-kind US bill would create a framework for reporting the technology’s environmental costs on a voluntary basis — but given the urgency of the situation, that’s not enough, argues AI scholar Kate Crawford. “Legislators should offer both carrots and sticks” to push AI companies towards greater ecological sustainability, she writes: incentives for creating energy-efficient systems and using renewable energy could be supported by regular environmental audits.

Reality check for AI in drug discovery

In a first-of-its-kind test of AI’s ability to come up with promising starting points for new medicines, 23 teams took part in a drug-discovery competition, together predicting more than 2,000 compounds that could bind to an enzyme associated with Parkinson’s disease. Fewer than a dozen actually bound to the enzyme when tested in the lab. “We’re at less than a 1% hit rate,” says computational chemist and competition coordinator Matthieu Schapira. Several top methods used machine learning, but classical methods such as ultra-high-throughput docking still kept up. “The question is, do we need really complicated, advanced AI technologies at every stage of the process?” asks pharmaceutical researcher Lukas Friedrich, who was a top-scoring competitor in the challenge.

Nature Reviews Drug Discovery | 10 min read

Can AI restore jobs computers killed?

“AI — used well — can assist with restoring the middle-skill, middle-class heart of the US labour market that has been hollowed out by automation and globalization,” argues economist David Autor. By making information and calculation cheap and abundant, Autor writes, “it will reshape the value and nature of human expertise”.

Algorithm distils observations into rules

Researchers have designed a deep-learning algorithm that generates computer code on the basis of observations. This ‘deep distilling’ could help unravel the laws underlying physical systems where simple rules give rise to complex self-organizing patterns — such as finding the relationship between DNA sequences and their function. It could also make it easier for people to understand what AI systems learn from data, writes computational scientist Joseph Bakarji. Many algorithms are ‘black boxes’ — researchers and users typically know the inputs and outputs, but it is hard to see what’s going on inside. “This lack of transparency raises questions about their reliability, safety, and trustworthiness,” Bakarji says.

Nature Computational Science | 5 min read

Reference: Nature Computational Science paper

‘Deep distilling’ can extract the rules from John Conway’s classic ‘Game of Life’ by observing iterations of the game and presenting its findings as an executable computer code that simulates the game. (Lev Kalmykov via Wikimedia Commons (CC BY-SA 4.0))
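For readers unfamiliar with the Game of Life, its standard rules fit in a few lines of code. The sketch below is a hand-written Python illustration of those well-known rules, not the output of the deep-distilling algorithm; the function name `step` and the choice to represent the grid as a set of live-cell coordinates are assumptions made for this example.

```python
from collections import Counter


def step(live):
    """Advance the Game of Life by one generation.

    `live` is a set of (row, col) tuples marking the live cells on an
    unbounded grid (a representation chosen for this illustration).
    """
    # Count, for every cell, how many of its eight neighbours are alive.
    neighbour_counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # A cell is alive in the next generation if it has exactly three live
    # neighbours, or if it is alive now and has exactly two.
    return {
        cell
        for cell, n in neighbour_counts.items()
        if n == 3 or (n == 2 and cell in live)
    }


# A "blinker": three cells in a row flip between horizontal and vertical.
blinker = {(1, 0), (1, 1), (1, 2)}
print(sorted(step(blinker)))
```

The whole game reduces to one local rule about neighbour counts, which is the kind of compact, human-readable rule that the caption describes being recovered from observed iterations.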

doi: https://doi.org/10.1038/d41586-024-00607-6
