New Research Shows Estimates of the Carbon Cycle – Vital to Predicting Climate Change – Are Incorrect

The findings do not counter the established science of climate change but highlight how the accounting of the amount of carbon withdrawn by plants and returned by soil is not accurate.

Virginia Tech researchers, in collaboration with Pacific Northwest National Laboratory, have discovered that key estimates in the global carbon cycle accounting used to track the movement of carbon dioxide in the environment are not correct, which could significantly alter conventional carbon cycle models.

The estimate of how much carbon dioxide plants pull from the atmosphere is critical to accurately monitoring and predicting the amount of climate-changing gases in the atmosphere. This finding has the potential to change predictions for climate change, though it is unclear at this juncture whether the mismatch will result in more or less carbon dioxide being accounted for in the environment.

“Either the amount of carbon coming out of the atmosphere from the plants is wrong or the amount coming out of the soil is wrong,” said Meredith Steele, an assistant professor in the School of Plant and Environmental Sciences in the College of Agriculture and Life Sciences, whose Ph.D. student at the time, Jinshi Jian, led the research team. The findings were published on April 1, 2022, in Nature Communications.

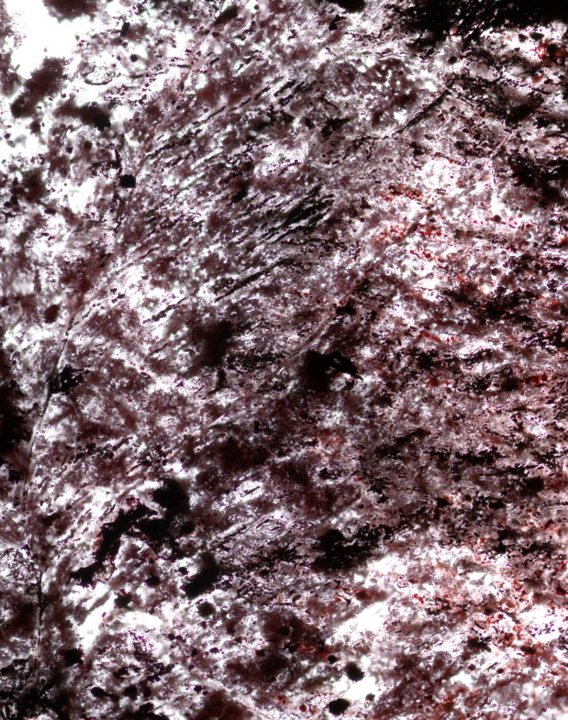

Meredith Steele. Credit: Photo by Logan Wallace for Virginia Tech.

“We are not challenging the well-established climate change science, but we should be able to account for all carbon in the ecosystem and currently cannot,” she said. “What we found is that the models of the ecosystem’s response to climate change need updating.”

Jian and Steele’s work focuses on carbon cycling and how plants and soil remove carbon dioxide from the atmosphere and return it.

To understand how carbon affects the ecosystems on Earth, it’s important to know exactly where all the carbon is going. This process, called carbon accounting, tracks how much carbon moves where and how much sits in each of Earth’s carbon pools: the oceans, the atmosphere, the land, and living things.

For decades, researchers have been trying to get an accurate accounting of where our carbon is and where it is going. Virginia Tech and Pacific Northwest National Laboratory researchers focused on the carbon dioxide that gets drawn out of the atmosphere by plants through photosynthesis.

Once plants take up carbon through photosynthesis, it moves into the terrestrial ecosystem. From there it moves into the soil or into animals that eat the plants. A large amount of carbon is also exhaled, or respired, back into the atmosphere.

The balance between the carbon dioxide coming in and going out is essential to understanding both how much carbon accumulates in the atmosphere, where it contributes to climate change, and how much is stored long-term.

However, the Virginia Tech researchers discovered that when the accepted number for soil respiration is used, the carbon cycle models no longer balance.

“Photosynthesis and respiration are the driving forces of the carbon cycle; however, the total annual sum of each of these at the global scale has been elusive to measure,” said Lisa Welp, an associate professor of earth, atmospheric, and planetary sciences at Purdue University, who is familiar with the work but was not part of the research. “The authors’ attempts to reconcile these global estimates from different communities show us that they are not entirely self-consistent and there is more to learn about these fundamental processes on the planet.”

What Jian and Steele, along with the rest of the team, found is that if global gross primary productivity (the carbon dioxide taken up by plants through photosynthesis) is set at its accepted value of 120 petagrams of carbon per year (a petagram is a billion metric tons), then the amount of carbon released through soil respiration should be in the neighborhood of 65 petagrams.

By analyzing multiple fluxes (the amounts of carbon exchanged between Earth’s carbon pools), the researchers found that the best estimate of soil respiration is about 95 petagrams of carbon per year. To balance that, gross primary productivity would need to be around 147 petagrams. For scale, the difference between the currently accepted 120 petagrams and this estimate is about three times the world’s annual fossil fuel emissions.
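The size of the mismatch can be checked with simple arithmetic on the figures quoted above. The sketch below is only a back-of-the-envelope comparison, not the study's method; the annual fossil fuel emissions value of 9.5 petagrams of carbon is an assumed round figure, not a number taken from the research.

```python
# Figures quoted in the article, in petagrams of carbon per year.
ACCEPTED_GPP = 120.0   # accepted global gross primary productivity
IMPLIED_RS = 65.0      # soil respiration implied by the accepted GPP
ESTIMATED_RS = 95.0    # soil respiration estimated from upscaled measurements
IMPLIED_GPP = 147.0    # GPP needed to balance the estimated soil respiration

# ASSUMPTION: round figure for annual fossil fuel emissions (not from the study).
FOSSIL_FUEL_PG_C = 9.5

# One petagram is a billion metric tons.
TONNES_PER_PETAGRAM = 1e9


def budget_gaps():
    """Return (GPP gap, soil respiration gap) in petagrams of carbon per year."""
    gpp_gap = IMPLIED_GPP - ACCEPTED_GPP
    rs_gap = ESTIMATED_RS - IMPLIED_RS
    return gpp_gap, rs_gap


gpp_gap, rs_gap = budget_gaps()
print(f"GPP gap: {gpp_gap:.0f} Pg C/yr ({gpp_gap * TONNES_PER_PETAGRAM:.2e} t)")
print(f"Soil respiration gap: {rs_gap:.0f} Pg C/yr")
print(f"GPP gap relative to fossil fuel emissions: {gpp_gap / FOSSIL_FUEL_PG_C:.1f}x")
```

Under that assumed emissions figure, the 27-petagram gap in gross primary productivity works out to a little under three years of fossil fuel emissions, consistent with the "about three times" comparison.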

According to the researchers, there are two possible explanations. The first is that the remote sensing approach may be underestimating gross primary production. The second is that upscaling local soil respiration measurements to the globe may be overestimating the amount of carbon returned to the atmosphere. Whether this misestimate is good or bad news for the well-established challenge of climate change is what needs to be examined next, Steele said.

The next step for the research is to determine which part of the global carbon cycling model is being under- or overestimated.

Accurate accounting of the carbon and where it sits in the ecosystem will make better models and predictions possible, so that researchers can accurately judge these ecosystems’ response to climate change, said Jian, who began this research as a Ph.D. student at Virginia Tech and is now at Northwest A&F University in China.

“If we think back to how the world was when we were young, the climate has changed,” Jian said. “We have more extreme weather events. This study should improve the models we used for carbon cycling and provide better predictions of what the climate will look like in the future.”

Because Jian was Steele’s first Ph.D. student at Virginia Tech, a portion of her startup fund went to support his graduate research. Jian, fascinated with data science, databases, and soil respiration, was working on another part of his dissertation when he stumbled across something that didn’t quite add up.

Jian was researching how to scale up small, localized carbon measurements from across the globe. In the process, he discovered that the best available estimates didn’t match up when all the fluxes of global carbon accounting were put together.

Reference: “Historically inconsistent productivity and respiration fluxes in the global terrestrial carbon cycle” by Jinshi Jian, Vanessa Bailey, Kalyn Dorheim, Alexandra G. Konings, Dalei Hao, Alexey N. Shiklomanov, Abigail Snyder, Meredith Steele, Munemasa Teramoto, Rodrigo Vargas and Ben Bond-Lamberty, 1 April 2022, Nature Communications.

DOI: 10.1038/s41467-022-29391-5

The research was funded by Steele’s startup fund from the College of Agriculture and Life Sciences at Virginia Tech and further supported by the Pacific Northwest National Laboratory.