by Ingrid Fadelli, Tech Xplore

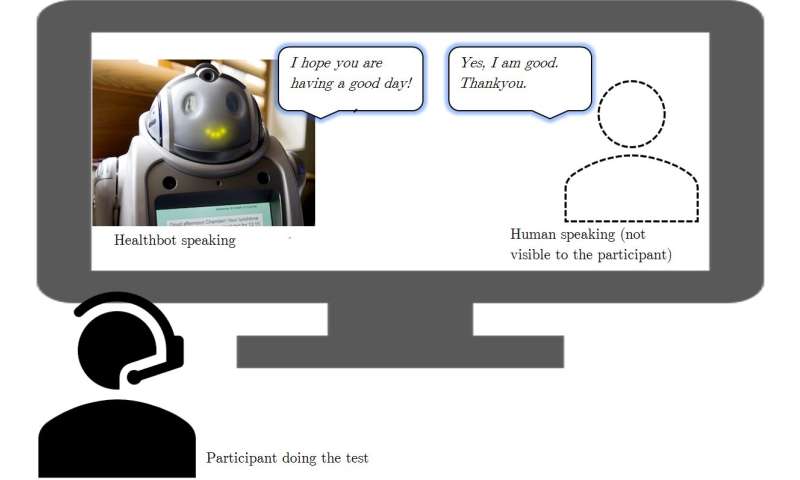

Healthbot, a health care robot developed at the University of Auckland. In the image, a user is interacting with Healthbot using a touch screen. (Credit: Centre for Automation and Robotic Engineering Science, University of Auckland)

Robots are gradually making their way into hospitals and other clinical facilities, providing basic assistance to doctors and patients. To facilitate their widespread use in health care settings, however, robotics researchers need to ensure that users feel at ease with robots and accept the help they can offer. This could potentially be achieved by developing robots that communicate in empathetic and compassionate ways.

With this in mind, researchers at the University of Auckland and Singapore University of Technology & Design have been using speech synthesis techniques to create robots that sound more empathetic. In a recent paper published in the International Journal of Social Robotics, they presented the results of an experiment exploring the effects of using an empathetic synthesized voice on users' perception of robots.

"Our recent study is based on approximately three years of research aimed at developing a synthetic voice for health care robots," Jesin James, one of the researchers who carried out the study, told Tech Xplore. "Past studies have shown that the type of synthesized voice used by robots can impact how users perceive them, which can encourage or discourage users from initiating interactions."

The speech research group at the University of Auckland and the Centre for Automation and Robotic Engineering Science have spent several years trying to develop health care robots that can assist people in care homes. Recently, they have focused their efforts on identifying voices that could make robots more acceptable to the people they interact with.

"A lot of research and development efforts in robotics focus on broadening the capabilities of robots," James explained. "However, users may be entirely discouraged from using robots if they perceive a lack of reciprocal empathy. We felt that a robot's voice plays an important role in how people perceive it, which is what ultimately inspired us to carry out our recent study."

A participant taking part in the perception test carried out by the researchers. (Credit: James et al.)

First, James and her colleagues tested the hypothesis that a robot's voice can impact how users perceive it by conducting a simple experiment using a robot called Healthbot. The robot's voice was that of a professional voice artist, who was recorded while reading dialogs in two tone variations: a flat monotone and an empathetic voice.

The researchers recruited 120 participants and asked them to share their perceptions after they had watched videos of Healthbot talking with these two different voices. The vast majority of participants said that they perceived the robot as more empathetic when it spoke using the more empathetic voice. These initial results encouraged the researchers to explore the possibility of producing a synthetic voice that reproduced the empathetic tone used by the professional voice artist.
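The article does not say how the researchers analyzed the preference data from this first test. As a minimal sketch of one common way to check whether a two-way preference is stronger than chance, the Python function below runs a one-sided exact binomial (sign) test. The function name and the 50% "no preference" baseline are assumptions for illustration, not the study's reported method.

```python
from math import comb


def binomial_sign_test(successes: int, n: int, p: float = 0.5) -> float:
    """One-sided exact binomial test: P(X >= successes) for X ~ Binomial(n, p).

    Hypothetical helper. A 'success' is a participant who judged the
    empathetic voice as more empathetic than the monotone one; p = 0.5
    models listeners having no preference between the two voices.
    """
    if not 0 <= successes <= n:
        raise ValueError("successes must be between 0 and n")
    # Sum the exact binomial probabilities of every outcome at least as extreme.
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(successes, n + 1))
```

For example, if a hypothetical 100 of the 120 participants had preferred the empathetic voice, `binomial_sign_test(100, 120)` would return a probability far below the conventional 0.05 threshold. The article does not report the actual counts, so this figure is illustrative only. The exact sum is used rather than a normal approximation because it stays accurate at any sample size.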

"Our study had two key objectives," James said. "One was to determine what type of synthesized voice is best for creating a health care robot that is perceived as empathetic. Once we identified it, we tried to use speech synthesis techniques to produce this voice."

When they analyzed the findings of a short pilot study, the researchers realized that the emotions that most influenced whether a listener perceived a voice as empathetic were not primary emotions (such as anger, sadness, joy and fear), but subtler, secondary emotions. These are complex emotions that human speakers often convey through their tone of voice, which might sound, for instance, apologetic, anxious, confident, enthusiastic or worried. This realization inspired James and her colleagues to compile JLCorpus, a new dataset of speech recordings conveying these secondary emotions.

By analyzing speech samples from this dataset, the team built a model describing the vocal qualities that a synthesized voice should have to be perceived as empathetic by human listeners. The model accounts for characteristics such as pitch, speech rate and intensity. The researchers then produced a synthesized voice with the qualities they had identified.
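The article does not describe how the team fed these parameters into its synthesizer. The sketch below assumes a speech engine that accepts W3C SSML markup and shows how a prosody profile (pitch shift, speaking rate and loudness change) could be attached to a sentence. `ProsodyProfile`, `to_ssml` and the example values are hypothetical placeholders, not the parameters reported in the study.

```python
from dataclasses import dataclass
from xml.sax.saxutils import escape


@dataclass(frozen=True)
class ProsodyProfile:
    """Hypothetical prosody adjustments relative to a neutral voice."""
    pitch_semitones: float  # relative pitch shift in semitones, e.g. -1.0
    rate_percent: float     # speaking rate as a percentage of the default rate
    volume_db: float        # relative loudness change in decibels


def to_ssml(text: str, profile: ProsodyProfile) -> str:
    """Wrap text in an SSML 1.1 <prosody> element carrying the profile."""
    pitch = f"{profile.pitch_semitones:+g}st"  # signed relative change in semitones
    rate = f"{profile.rate_percent:g}%"        # non-negative percentage of default
    volume = f"{profile.volume_db:+g}dB"       # signed relative change in dB
    return (
        '<speak version="1.1" xmlns="http://www.w3.org/2001/10/synthesis" xml:lang="en">'
        f'<prosody pitch="{pitch}" rate="{rate}" volume="{volume}">'
        f"{escape(text)}"  # escape &, < and > so the markup stays well-formed
        "</prosody></speak>"
    )
```

For instance, `to_ssml("I'm sorry to hear that.", ProsodyProfile(-1.0, 90, -2.0))` wraps the sentence in a `<prosody pitch="-1st" rate="90%" volume="-2dB">` element. Keeping the profile separate from the markup makes it easy to swap in measured values for each emotion; how faithfully a given engine honors each attribute varies by implementation.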

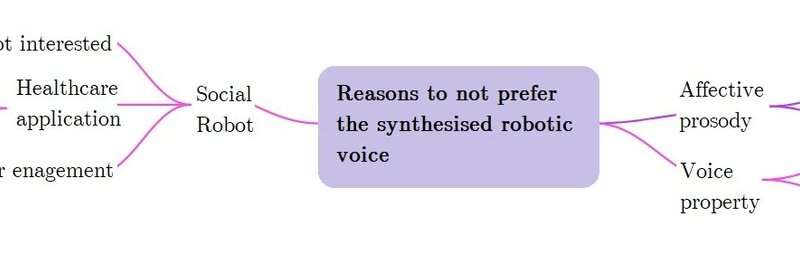

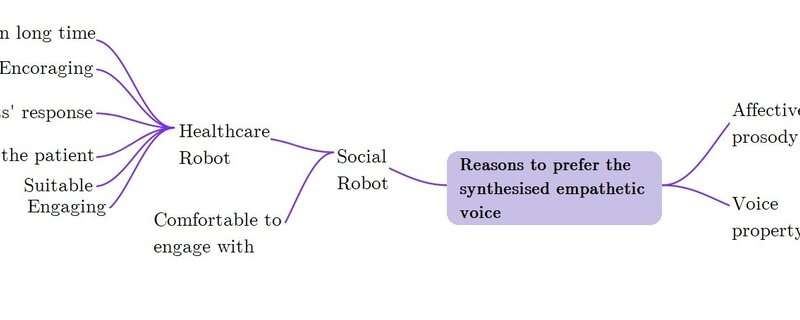

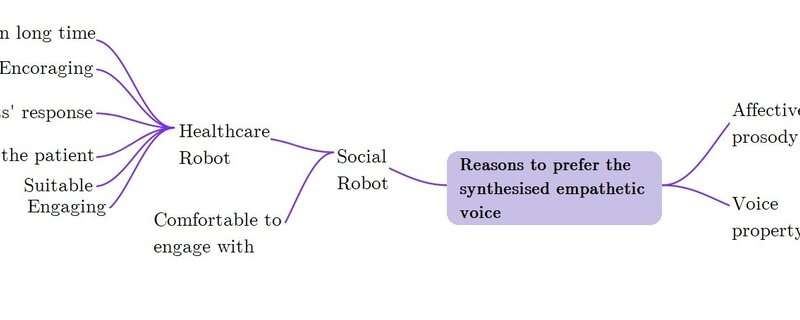

Mind map of reasons why participants did not prefer the robotic voice without empathetic emotions, based on the responses given by participants in the perception test. Credit: James et al.

Subsequently, James and her colleagues carried out a second perception test, during which users viewed videos of Healthbot speaking with the synthesized voice they had created and shared their perceptions of the robot.

"In this second perception test, participants saw a video of the health care robot speaking with the synthesized voice and rated the perceived empathy on a five-point scale based on the Motivational Interviewing Treatment Integrity (MITI) module," James said. "This is a five-point scale used to rate a clinician's empathy, and the same scale was used here with modifications done to suit the health care robot."

In the videos that the researchers showed participants, Healthbot had a neutral facial expression and did not accompany its speech with any particular hand gestures, so it could convey emotion and empathy only through its voice. The vast majority of those who took part in the second test said that they perceived the robot as highly empathetic, rating it highly on the five-point MITI-based scale.
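The article does not say how the ratings were aggregated or what counted as a "high" rating. A minimal Python sketch, assuming integer ratings from 1 to 5 and treating 4 or 5 as high (both assumptions), summarizes such responses by their mean and the share of high ratings. The function name is hypothetical, and the MITI-based scale items themselves are not reproduced here.

```python
from statistics import mean


def summarize_ratings(ratings: list[int], high_threshold: int = 4) -> dict[str, float]:
    """Summarize 1-5 ratings by their mean and the share at or above high_threshold.

    Hypothetical helper; the threshold of 4 is an illustrative assumption.
    """
    if not ratings:
        raise ValueError("no ratings given")
    if any(r not in range(1, 6) for r in ratings):
        raise ValueError("ratings must be integers from 1 to 5")
    # Count ratings that fall in the assumed "high" band.
    high = sum(1 for r in ratings if r >= high_threshold)
    return {"mean": float(mean(ratings)), "share_high": high / len(ratings)}
```

With the hypothetical ratings `[5, 4, 4, 3, 5]`, the function returns a mean of 4.2 and a high-rating share of 0.8. Reporting the share alongside the mean helps distinguish consistently high ratings from an average pulled up by a few very positive responses.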

"Our findings suggest that people can perceive empathy from voice alone, without any supporting facial expressions or gestures," James said. "This implies that a robot's voice plays a key role in how humans perceive it. This voice is not just a medium of communicating with humans; it can actually impact their perceptions."

In addition to highlighting the important role that a robot's voice plays in how humans perceive it, the study by James and her colleagues suggests that subtle, secondary emotions are what ultimately make a voice sound more empathetic. These findings could pave the way for new studies of secondary emotions, which have so far seldom been investigated.

"These emotions are subtle in nature, can be culture-specific and are sometimes difficult to define and reproduce, but this is the exciting part about analyzing them," James said. "We are not exactly sure about what we could find and that makes it all the more interesting. There is a lack of resources and databases to study secondary emotions, so developing these resources would be the next step forward."

In the future, the synthesized voice produced by this team of researchers could be used to create health care robots that sound more empathetic and human-like. Meanwhile, the researchers plan to continue exploring how humans convey subtle secondary emotions in speech, so that they can synthesize robot voices that are increasingly convincing and empathetic. They would also like to develop emotion recognition models that can automatically detect these emotions in the voices of human speakers.

"So far, we carried out perception tests using a video of the robot speaking to participants," James said. "We are now conducting perception tests in such a way that participants can actually sit near a physical robot and interact with it. We expect that the presence of the robot near the participants will impact their perception of the robot further."

More information: Empathetic speech synthesis and testing for healthcare robots. International Journal of Social Robotics (2020). DOI: 10.1007/s12369-020-00691-4.

Emotional speech corpus: github.com/tli725/JL-Corpus

© 2020 Science X Network
