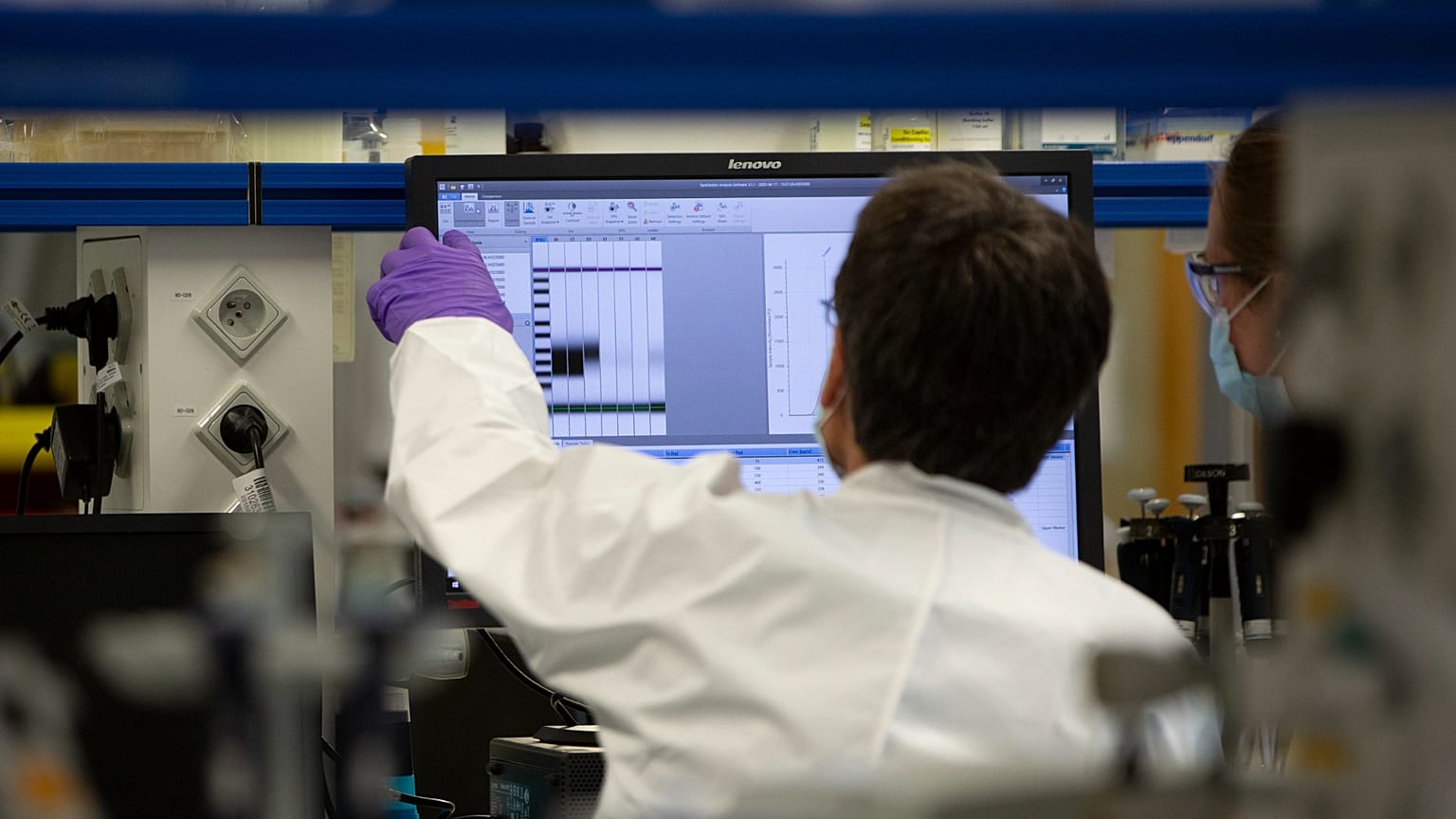

Artificial intelligence (AI) models for biology rely heavily on large volumes of biological data, including genetic sequences and pathogen characteristics. But should this information be universally accessible, and how can its legitimate use be ensured?

More than 100 researchers have warned that unrestricted access to certain biological datasets could enable AI systems to help design or enhance dangerous viruses, calling for stronger safeguards to prevent misuse.

In an open letter, researchers from leading institutions, including Johns Hopkins University, the University of Oxford, Fordham University, and Stanford University, argue that while open-access scientific data has accelerated discovery, a small subset of new biological data poses biosecurity risks if misused.

“The stakes of biological data governance are high, as AI models could help create severe biological threats,” the authors wrote.

AI models used in biology can predict mutations and identify patterns, and could potentially be used to generate more transmissible variants of pandemic pathogens.

The authors describe this as a “capability of concern,” which could accelerate and simplify the creation of transmissible biological pathogens that can lead to human pandemics, or similar events in animals, plants, or the environment.

Biological data should generally be openly available, the researchers noted, but “concerning pathogen data” requires stronger security checks.

“Our focus is on defining and governing the most concerning datasets before they are generally available to AI developers,” they wrote in the paper, proposing a new framework to regulate access.

“In a time dominated by open-weight biological AI models developed across the globe, limiting access to sensitive pathogen data to legitimate researchers might be one of the most promising avenues for risk reduction,” said Moritz Hanke of Johns Hopkins University, a co-author of the letter.

What developers are doing

Currently, no universal framework regulates these datasets. While some developers voluntarily exclude high-risk data, researchers argue that clear and consistent rules should apply to all.

Developers of two leading biological AI models, Evo (created by researchers at Arc Institute, Stanford, and TogetherAI) and ESM3 (from EvolutionaryScale), have withheld certain viral sequences from their training data.

In February 2025, the Evo 2 team announced that they had excluded pathogens infecting humans and other complex organisms from their datasets due to ethical and safety risks, and to “preempt the use of Evo for the development of bioweapons”.

Evo 2 is an open-source AI model for biology that can predict the effects of DNA mutations, design new genomes, and uncover patterns in genetic code.

“Right now, there's no expert-backed guidance on which data poses meaningful risks, leaving some frontier developers to make their best guess and voluntarily exclude viral data from training,” co-author Jassi Pannu wrote on LinkedIn.

Different types of risky data

The authors note that the proposed framework applies only to a small fraction of biological datasets.

It introduces a five-tier Biosecurity Data Level (BDL) system that categorises pathogen data by risk, based on its potential to enable AI systems to learn general viral patterns and biological threats to both animals and humans. The tiers are:

BDL-0: Everyday biological data. This should carry no restrictions and can be shared freely.

BDL-1: Basic viral building blocks, such as genetic sequences. These do not require extensive security checks, but logins and access should be monitored.

BDL-2: Data on animal virus traits, such as the ability to jump between species or survive outside the host.

BDL-3: Data on human virus characteristics, such as transmissibility, symptoms, and vaccine resistance.

BDL-4: Data on enhanced human viruses, such as mutations that make the COVID-19 virus more contagious. This category would face the strictest restrictions.

Ensuring safe access

To ensure safe access, the letter calls for specific technical tools that would enable data providers to verify legitimate users and track misuse.

Proposed tools include watermarking (embedding hidden, unique identifiers in datasets so that leaks can be traced), data provenance, audit logs that record access and changes with tamper-proof signatures, and behavioural biometrics that can track unique user interaction patterns.
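The letter does not describe how such audit logs would be built. As a rough illustration of the general idea, here is a minimal Python sketch of a tamper-evident access log, assuming a hash chain in which each entry carries an HMAC computed over its own contents plus the previous entry's HMAC. The `AuditLog` class, its field names, and the shared secret key are hypothetical; a real data provider would more likely use public-key signatures and anchor the log outside its own systems.

```python
import hashlib
import hmac
import json
import time


class AuditLog:
    """Hypothetical append-only access log; each entry is chained to the one before it."""

    GENESIS = "0" * 64  # placeholder "previous MAC" for the first entry

    def __init__(self, key: bytes):
        self._key = key  # assumption: a secret held only by the data provider
        self.entries: list[dict] = []

    def _sign(self, body: dict) -> str:
        # Canonical JSON so the same entry always yields the same MAC
        payload = json.dumps(body, sort_keys=True).encode()
        return hmac.new(self._key, payload, hashlib.sha256).hexdigest()

    def record(self, user: str, action: str, dataset: str) -> dict:
        prev = self.entries[-1]["mac"] if self.entries else self.GENESIS
        body = {"user": user, "action": action, "dataset": dataset,
                "time": time.time(), "prev": prev}
        entry = {**body, "mac": self._sign(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "mac"}
            if body["prev"] != prev:
                return False  # an entry was deleted, reordered, or inserted
            if not hmac.compare_digest(entry["mac"], self._sign(body)):
                return False  # an entry's contents were altered
            prev = entry["mac"]
        return True


# Usage: editing any recorded access breaks verification
log = AuditLog(key=b"demo-secret")
log.record("alice", "download", "example-dataset")
log.record("bob", "read", "example-dataset")
print(log.verify())  # True
log.entries[0]["user"] = "mallory"
print(log.verify())  # False
```

Because each MAC covers the previous one, quietly editing or deleting a past access record invalidates every later entry unless the attacker also holds the key.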

The researchers argue that striking the right balance between openness and necessary security restrictions on high-risk data will be essential as AI systems become more powerful and widely available.

AI: Use, misuse, and a muddled future

By Dr. Tim Sandle

SCIENCE EDITOR

DIGITAL JOURNAL

February 18, 2026

Anyone with a smartphone and specialized software can create harmful deepfake images - Copyright AFP Mark RALSTON

AI is a powerful tool, yet it remains simply a tool. It is not a friend, not a companion, and not an infallible source of truth. Used carelessly, it can erode creativity, weaken education, and even cause real harm. This “human-at-the-helm” view emphasises that AI lacks consciousness, desire, or the capacity to make moral decisions; it simply follows instructions, analysing patterns and generating results based on probability.

Is this view too simplistic? Some consider the “AI as a tool” framing a dangerous oversimplification of a rapidly changing technology. For example, emerging “agentic AI” can troubleshoot and take action with minimal human oversight, blurring the line between a tool and an independent actor.

Other dangers are more immediate. With deepfake-related fraud already up more than 2,000% in the past three years, and cases such as UK firm Arup losing £20 million to AI-driven impersonation, the problems arising from the wider use of AI are accelerating.

The firm TRG Datacenters has shared key warnings with Digital Journal on where AI can go wrong, from fraud and bias to psychological and creative risks, and what to do about it.

AI Deepfakes Open Doors To More Impersonations and Fraud

Deepfake scams are among the fastest-growing threats. In the UK, engineering firm Arup lost £20 million after criminals impersonated executives on a video call. But video is only the tip of the iceberg: AI is also being used to clone voices for scam calls, generate convincing letters from “banks” or “lawyers,” and produce emails so polished that even seasoned professionals are fooled.

Protect yourself: Verified payment portals, digital watermarking, and liveness tests can expose fakes.

AI in Hiring Isn’t As Objective As It Seems

Applicants now use AI to polish résumés, while employers rely on AI to screen them. The result is a stalemate: machine-generated CVs are filtered by machine reviewers, leaving candidates unseen and employers unable to identify real talent.

Protect yourself: Treat AI as a sorting aid, not a final judge. Human recruiters must review shortlists, and platforms should be bias-audited. Overall, automatic rejections by AI hurt both candidates and employers.

AI Chatbots Are Not Friends Or Therapists

As more people use AI chatbots for emotional support, the risks are becoming more noticeable. These systems cannot understand feelings or exercise emotional intelligence. They reflect emotions and, in most cases, tell people what they want to hear, but cannot provide objectivity. The Adam Raine case showed how fragile existing safeguards are: a teenager received reinforcement of suicidal thoughts instead of intervention. Without regulation, particularly for children, the dangers will only escalate.

Protect yourself: Platforms must add escalation protocols that route at-risk users to human help. Child-safe filters and stricter oversight are essential to limit harm.

Generative AI Makes You Learn And Analyse Less

Generative AI is efficient, but its overuse is already reshaping how people learn and work. Students, employees, and entire institutions now lean heavily on chatbots to draft papers, homework, and reports. This undermines the very skills education is meant to build: searching, analysing, and developing independent thought.

Protect yourself: Education must adapt, with assignments that test reasoning and originality through oral exams, projects, and real-time work.

Used wisely, AI can amplify productivity and open new opportunities, yet it can also go wrong and be misused. The responsibility for how these technologies are used rests with us now, and it is time to build a robust regulatory and safety framework to govern the fair use of AI.

Digital transformation fails ‘without emotional intelligence’

By Dr. Tim Sandle

SCIENCE EDITOR

DIGITAL JOURNAL

February 17, 2026

An office block in London. — Image by © Tim Sandle.

Technology sector leaders have long been praised for driving automation, AI and digital transformation. As the pace of change intensifies, a longstanding skill may have become the ultimate differentiator: emotional intelligence.

This renewed focus on emotional intelligence has been taken up by Anna Murphy, Chief of Staff at Version 1. Murphy argues that empathy, clarity and emotional awareness are no longer ‘soft skills’, but rather the foundation of sustainable transformation.

Why tech-first strategies fail

Drawing on her experience leading people through complex change, Murphy has told Digital Journal why tech-first strategies fail without trust, how emotionally intelligent leadership empowers innovation, and why future-ready organisations must invest just as much in their people as their platforms.

Murphy contends that a strong human element is needed, noting: “Digital transformation, which has swiftly become imperative in modern business, is often seen as a technical challenge. In reality, it is a human journey because its successful implementation delivers a stronger workforce. The role of leadership is to ensure that innovation empowers people, rather than alienating them. The efficiencies gained through digital transformation need to be felt by the workforce and empower them.”

Digital transformation and the human element

This has consequences for leaders. In Murphy's view: “For leaders pursuing digital transformation, across a wider range of sectors, understanding people is as critical as understanding systems. As skilled workers adapt their capabilities in order to achieve their aspirations, leaders are recognising what motivates them and how technology can play its part.”

Drawing on her own findings, Murphy notes: “After all, my experience has shown that the best strategies often fail without empathy, while even the best technologies underperform without trust.”

More than just automation

One of the most common examples of digital transformation is automation. For Murphy, this will only take an aspiring company so far – more is needed: “While these steps are integral to making the process operate effectively, the most successful transformations share a much more emotive truth. They elevate people, not just performance. Studies have found that while AI could automate parts of two-thirds of jobs, the expectation is that AI will work alongside humans, not replace them.”

Developing the human element further, Murphy says: “In their infancy, the most successful companies have, understandably, been driven by logic and efficiency. Yet as artificial intelligence reshapes business models and workforces, the very skills that made these technological leaders successful are being put to the test.”

Murphy continues: “The ability to code, analyse, and optimise must never be taken for granted, but it is also no longer enough. Technical skills are the catalyst for innovation, but understanding how solutions solve problems for both team members and customers allows you to improve them further. Today’s leaders must also listen, empathise and inspire.”

As for the key benefits for firms, she says: “When employees feel empowered rather than replaced by technology, you can create an environment in which innovation thrives. This requires emotionally intelligent leadership. Leaders who communicate change with clarity, recognise resistance as a natural response, and create psychological safety for experimentation and learning.”

The future of work is deeply human

A few years ago, a Gartner survey found that 80% of executives believed automation could be applied to any business decision. According to Murphy: “As we have learned more about the appropriate use cases for automation, and evolving technologies, this belief is swiftly changing as more business leaders revitalise the human element of their organisations.”

She also opines: “In a world of intelligent machines, emotional intelligence, the ability to understand and manage emotions in oneself and others, has become the ultimate competitive advantage. It is what separates leaders who can drive transformation from those who are, at times, consumed by it.”

Looking to the future, Murphy predicts: “As AI and automation continue to evolve, the emotional dimension of leadership will only grow more critical. Machines now have imperative uses in modern business. They analyse data and optimise operations, allowing workforces to concentrate on the human side of their output. Yet they are not able to build belief, nurture talent or foster belonging.”

She adds: “Perhaps it is worth considering that success in technology will hinge less on who has the most advanced systems, and more on who can bring people together around a shared sense of purpose. Emotional intelligence is not the opposite of innovation. It gives innovators a strong foundation.”

Murphy’s concluding comment is: “The leaders who are sensitive to how change impacts their workforce, through emotional intelligence, will not only build better businesses, but also offer better futures for the people who make the goals and outcomes of those businesses possible.”