A scalable reinforcement learning–based framework to facilitate the teleoperation of humanoid robots

The effective operation of robots from a distance, also known as teleoperation, could allow humans to complete a vast range of manual tasks remotely, including risky and complex procedures. Teleoperation could also be used to compile datasets of human motions, which could help to train humanoid robots on new tasks.

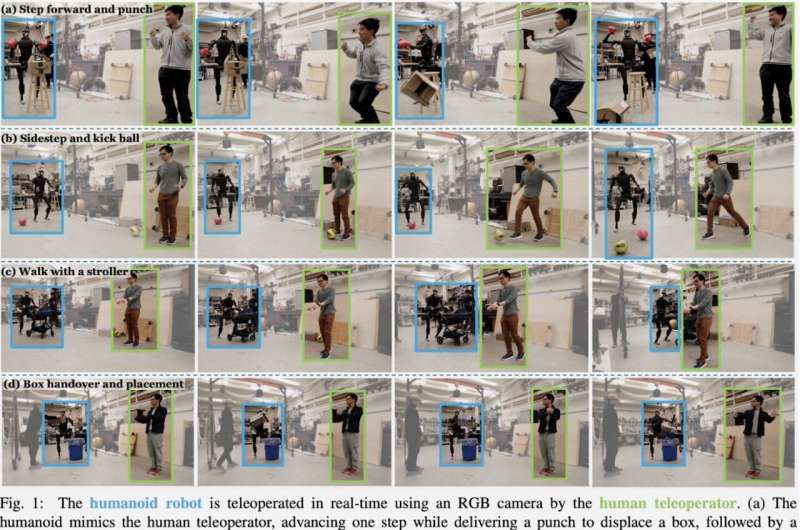

Researchers at Carnegie Mellon University recently developed Human2HumanOid (H2O), a method to enable the effective teleoperation of human-sized humanoid robots. This approach, introduced in a paper posted to the arXiv preprint server, could enable the training of humanoid robots on manual tasks that require specific sets of movements, including playing various sports, pushing a trolley or stroller, and moving boxes.

"Many people believe that 2024 is the year of humanoid, largely because the embodiment alignment between humans and humanoids allows for a seamless integration of human cognitive skills with versatile humanoid capabilities," Guanya Shi, co-author of the paper, told Tech Xplore.

"Yet before such an exciting integration, we need to first create an interface between human and humanoid for data collection and algorithm development. Our work H2O (Human2HumanOid) takes the first step, introducing a real-time whole-body teleoperation system using just an RGB camera, which allows a human to precisely teleoperate a humanoid in many real-world tasks."

The recent work by these researchers facilitates the teleoperation of full-sized humanoid robots in real time. In contrast with many methods introduced in previous studies, H2O relies only on an RGB camera, which makes it easier to scale up and deploy widely.

"We believe that human teleoperation will be essential for scaling up the data flywheel for humanoid robots, and making teleoperation accessible and easy to do is our main objective," Tairan He, co-author of the paper, told Tech Xplore. "Inspired by prior works that tackled parts of this challenge—like physics-based animation of human motions, transferring human motions to real-world humanoids, and teleoperation of humanoids—this study aims to amalgamate these components into a single framework."

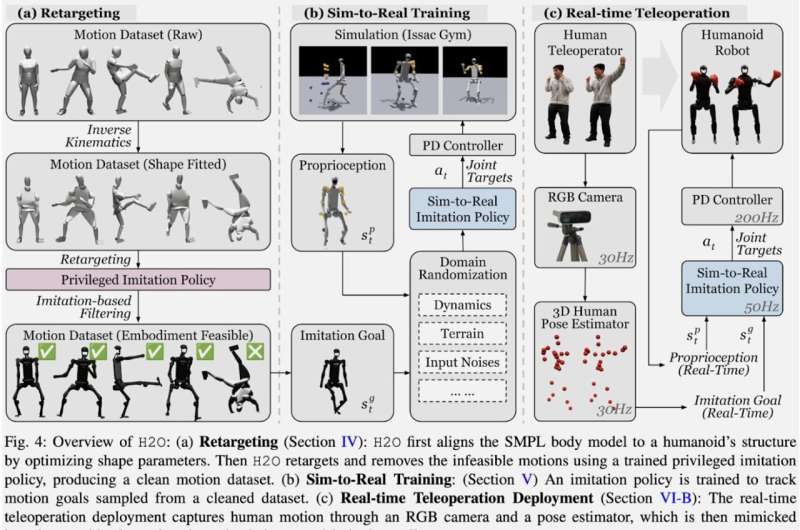

H2O is a scalable and efficient method that allows researchers to compile large datasets of human motions and retarget these motions to humanoid robots, so that humans can teleoperate them in real time, reproducing all their body movements on the robot. Achieving full-body teleoperation of robots in real time is challenging for two reasons: the bodies of humanoid robots do not always allow them to replicate human motions involving different limbs, and existing model-based controllers do not always produce realistic movements in robots.

"H2O teleoperation is a framework based on reinforcement learning (RL) that facilitates the real-time whole-body teleoperation of humanoid robots using just an RGB camera," He explained. "The process starts by retargeting human motions to humanoid capabilities through a novel 'sim-to-data' methodology, ensuring the motions are feasible for the humanoid's physical constraints. This refined motion dataset then trains an RL-based motion imitator in simulation, which is subsequently transferred to the real robot without further adjustment."

The method developed by Shi, He and their colleagues has numerous advantages. The researchers showed that despite its minimal hardware requirements, it allows robots to perform a wide array of dynamic whole-body motions in real time.

The input footage used to teleoperate robots is collected using a standard RGB camera. The system's other components include a retargeting algorithm, a method to clean human motion data in simulations (ensuring that motions can be effectively replicated in robots) and a reinforcement learning-based model that learns new teleoperation policies.
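As a rough illustration of two of these components, the sketch below shows a data-cleaning filter that keeps only motions a simulated robot can reproduce, and an imitation-style reward that an RL policy could be trained to maximize. It is a toy sketch under stated assumptions, not the authors' implementation: the article does not describe the filter criterion or the reward, so `MotionClip`, `clamp_rollout`, `filter_feasible`, `tracking_reward`, the joint limits and the error threshold are all hypothetical, and the "simulation" is replaced by simple clamping of joint angles.

```python
import math
from dataclasses import dataclass
from typing import Callable, List, Sequence

Frames = List[List[float]]  # frames x joints, joint angles in radians


@dataclass
class MotionClip:
    name: str
    frames: Frames


def mean_abs_error(reference: Frames, achieved: Frames) -> float:
    """Average absolute joint-angle gap between two equally shaped motions."""
    gaps = [abs(r - a) for rf, af in zip(reference, achieved) for r, a in zip(rf, af)]
    return sum(gaps) / len(gaps) if gaps else 0.0


def clamp_rollout(clip: MotionClip, lower: float = -1.0, upper: float = 1.0) -> Frames:
    """Toy stand-in for a physics rollout (assumption): joints saturate at their limits."""
    return [[min(max(q, lower), upper) for q in frame] for frame in clip.frames]


def filter_feasible(
    clips: Sequence[MotionClip],
    rollout: Callable[[MotionClip], Frames],
    max_error: float,
) -> List[MotionClip]:
    """Keep only the clips that the simulated robot reproduces within max_error."""
    return [c for c in clips if mean_abs_error(c.frames, rollout(c)) <= max_error]


def tracking_reward(target: Sequence[float], actual: Sequence[float], sharpness: float = 5.0) -> float:
    """Imitation-style reward: 1.0 for a perfect match, decaying with squared error."""
    sq_err = sum((t - a) ** 2 for t, a in zip(target, actual))
    return math.exp(-sharpness * sq_err)


# Hypothetical two-joint clips: one within the toy joint limits, one far outside them.
clips = [
    MotionClip("walk", [[0.1, -0.2], [0.3, 0.4]]),
    MotionClip("cartwheel", [[2.5, -3.0], [0.0, 0.5]]),
]
kept = filter_feasible(clips, clamp_rollout, max_error=0.1)
print([c.name for c in kept])  # prints ['walk']
```

In this toy setup the out-of-range clip is dropped before training, so the policy is only rewarded for tracking motions the robot can physically perform. A real pipeline would replace `clamp_rollout` with rollouts in a physics simulator.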

"The most notable achievement of our study is the successful demonstration of learning-based, real-time whole-body humanoid teleoperation, a first of its kind to the best of our knowledge," He said. "This demonstration opens new avenues for humanoid robot applications in environments where human presence is risky or impractical."

The researchers demonstrated the feasibility of their approach in a series of real-world tests, where they teleoperated a humanoid robot and successfully reproduced various motions, including displacing a box, kicking a ball, pushing a stroller, and catching a box and dropping it into a waste bin.

The H2O framework could soon be used to replicate other motions and train robots on numerous real-world tasks, ranging from household chores and maintenance to providing medical assistance and even rescuing humans from dangerous locations. As it only requires an RGB camera, this new method could be realistically implemented in a wide range of settings.

"The 'sim-to-data' process and the RL-based control strategy could also influence future developments in robot teleoperation and motion imitation," He said. "Our future research will focus on improving and expanding the capabilities of humanoid teleoperation. Key areas include enhancing the fidelity of motion retargeting to cover a broader range of human activities, addressing the sim-to-real gap more effectively and exploring ways to incorporate feedback from the robot to the operator to create a more immersive teleoperation experience."

In their next studies, Shi, He and their collaborators plan to advance their system further. For instance, they would like to enhance its performance in complex, unstructured and unpredictable scenarios, as this could simplify its real-world deployment.

"We also plan to extend the framework to include manipulation with dexterous hands and gradually improve the level of autonomy of the robot to finally achieve efficient, safe, and dexterous human-robot collaboration," Changliu Liu added.

More information: Tairan He et al, Learning Human-to-Humanoid Real-Time Whole-Body Teleoperation, arXiv (2024). DOI: 10.48550/arxiv.2403.04436

© 2024 Science X Network
